Serverless architecture is a way to build and run applications and services without managing infrastructure.

By Dave Green December 17, 2021

Technology waits for no one.

When you become accustomed to a certain tool or architecture, you may find yourself asking, 'Do I have to start all over again every time something new comes out?' Switching can mean large-scale restructuring or refactoring, which puts you in a difficult position and makes mistakes more likely.

What if, a few years after finishing a migration, we had to start over again with yet another new product? That is not an easy task, and it requires research and careful consideration.

Ultimately, if we are already providing our customers with excellent service, a change this significant may not make much sense. On the other hand, if we reject innovation, we risk remaining stuck in the past. And it is precisely at such times that technology can take dramatic leaps toward a better and brighter future.

This is what comes to mind as terms like "serverless architecture" start appearing everywhere.

If thinking about change gives you a headache, consider this idea: "When it comes to web architecture, composability is the most important thing."

Personally, I agree. Considering how my front-end applications are structured, and how many organizations are successfully moving from traditional monolithic architectures to decoupled microservices, I can see a clear roadmap that leads directly to serverless architecture. By the end of this article, I believe you will see it too. Let's look at what serverless architecture is, along with its advantages and disadvantages.

1. What is Serverless Architecture?

First, I will explain what serverless architecture is.

Serverless architecture is not a replacement for microservices, nor does it mean there are no servers. The servers still exist; the cloud service provider simply manages them for you. Microservices and serverless computing can work together within the same application or be used separately.

There are many types of cloud services, and what counts as serverless architecture is often ambiguous.

Many people equate serverless with Function-as-a-Service (FaaS), and in its simplest form, I believe that is indeed true.

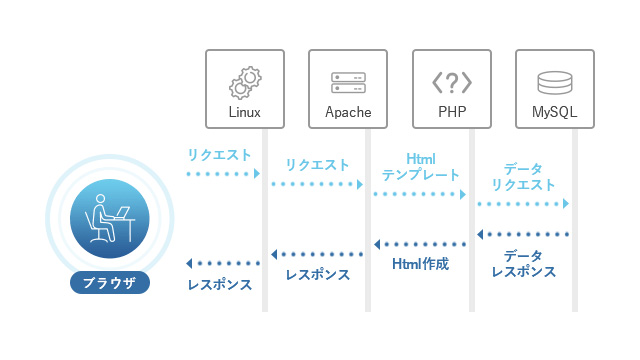

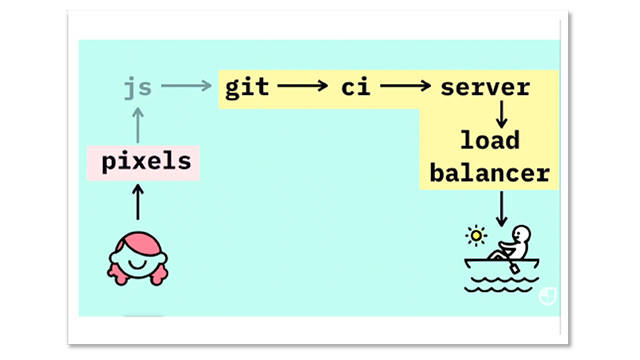

FaaS is a subset of serverless, also known as serverless functions. These functions are executed in response to events, such as when a user clicks a button. Cloud service providers manage the infrastructure on which these functions operate, so you literally only need to write and deploy code. Communication between the frontend and serverless functions is as simple as an API call.
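To make this concrete, here is a minimal sketch of an HTTP-triggered function in TypeScript. The `HttpEvent` and `HttpResponse` types are hypothetical simplifications: each provider (AWS Lambda, Netlify Functions, Vercel, Cloudflare Workers, and so on) defines its own handler signature and event shape, so treat this as an illustration of the idea rather than any platform's actual API.

```typescript
// HYPOTHETICAL, provider-neutral shapes for an HTTP-triggered function.
// Real platforms define their own (richer) event and response types.
interface HttpEvent {
  httpMethod: string;
  path: string;
  body: string | null;
}

interface HttpResponse {
  statusCode: number;
  headers: Record<string, string>;
  body: string;
}

// The function runs only when an event (here, an HTTP request) arrives.
// There is no server setup, port binding, or process management in this file;
// export it in whatever form your platform expects.
function handler(event: HttpEvent): HttpResponse {
  if (event.httpMethod !== "POST") {
    return {
      statusCode: 405,
      headers: { "Content-Type": "application/json" },
      body: JSON.stringify({ error: "Method not allowed" }),
    };
  }

  // Parse the JSON payload sent by the frontend, e.g. after a button click.
  const payload = event.body ? JSON.parse(event.body) : {};
  const name = typeof payload.name === "string" ? payload.name : "world";

  return {
    statusCode: 200,
    headers: { "Content-Type": "application/json" },
    body: JSON.stringify({ message: `Hello, ${name}!` }),
  };
}

// Local simulation of the platform invoking the function for one request.
const response = handler({
  httpMethod: "POST",
  path: "/api/greet",
  body: JSON.stringify({ name: "Jamstack" }),
});
```

On the frontend, calling the deployed function is an ordinary HTTP request, for example `fetch("/api/greet", { method: "POST", body: JSON.stringify({ name: "Jamstack" }) })`. The `/api/greet` route is hypothetical; how functions map to URLs depends on the provider.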

Serverless computing was popularized as a cloud service by Amazon Web Services, which launched AWS Lambda in 2014. Other popular FaaS offerings from cloud vendors include the following:

- Netlify Functions

- Vercel Serverless Functions

- Cloudflare Workers

- Google Cloud Functions

- Microsoft Azure Functions

- IBM Cloud Functions

Cloud vendors offer many services that are often confused with serverless.

- Infrastructure-as-a-Service (IaaS)

- Platform-as-a-Service (PaaS)

- Software-as-a-Service (SaaS)

- Backend-as-a-Service (BaaS)

Each of these is a topic in its own right, and since this article is a high-level overview of serverless, I won't explain them in detail. What they have in common is that the cloud service provider takes care of the underlying infrastructure, so customers do not need to worry about it.

In other words, you can focus solely on the application and customer experience while reducing time, resources, complexity, and costs.

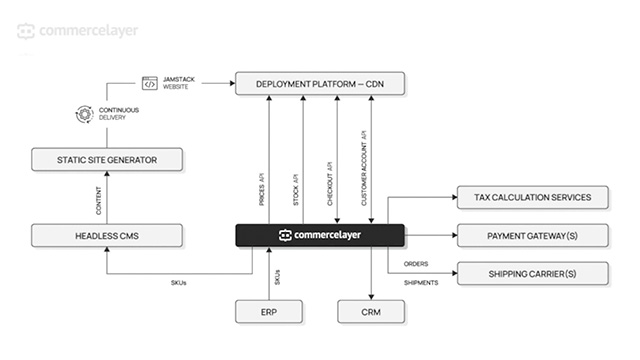

This is essentially what the "A" (APIs) in Jamstack stands for, and it is also a fundamental advantage of the MACH (Microservices, API-first, Cloud-native, Headless) ecosystem, where serverless architecture often plays a crucial role.

2. Why adopt a serverless architecture?

Serverless and related cloud services are still relatively new, but new technologies reach the market every year and the field is evolving at an astonishing pace.

However, while serverless computing offers many advantages compared to traditional architectures, it is not a panacea.

As with anything, there are cases where serverless architecture may not meet your requirements.

2-1 Advantages of Serverless Architecture

Serverless computing still runs on servers, but those servers are managed entirely by the cloud service provider, so there is no server management for you to do. It also provides automatic scaling, high availability, and no paying for idle capacity.

There are many ways serverless can help reduce costs. With a traditional server architecture, you usually had to predict demand and buy more server capacity than you needed to avoid performance bottlenecks and downtime. With serverless, code runs only when it is triggered, and the cloud service provider charges based on actual usage. Furthermore, because the provider handles server overhead and maintenance, developers and other IT professionals spend little or no time on it. Just as cloud computing significantly reduced hardware costs, serverless can greatly reduce labor costs.
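The pay-per-use argument is easier to judge with numbers. Below is a rough cost model in TypeScript, assuming a pricing scheme of a per-request fee plus a fee per GB-second (memory allocated multiplied by run time), which is the general shape of several FaaS billing models. Every price in it is a made-up placeholder, not any vendor's actual rate.

```typescript
// Every price in this file is a HYPOTHETICAL placeholder, not a real vendor rate.
interface PayPerUsePricing {
  pricePerMillionRequests: number; // USD per 1,000,000 invocations
  pricePerGbSecond: number; // USD per GB of memory per second of execution
}

interface Workload {
  requestsPerMonth: number;
  avgDurationMs: number;
  memoryGb: number;
}

// Usage-based bill: pay only for invocations and the compute time they consume.
function serverlessMonthlyCost(w: Workload, p: PayPerUsePricing): number {
  const requestCost = (w.requestsPerMonth / 1_000_000) * p.pricePerMillionRequests;
  const gbSeconds = w.requestsPerMonth * (w.avgDurationMs / 1000) * w.memoryGb;
  return requestCost + gbSeconds * p.pricePerGbSecond;
}

// Provisioned bill: pay for every server kept running, whether busy or idle.
function provisionedMonthlyCost(servers: number, pricePerServerMonth: number): number {
  return servers * pricePerServerMonth;
}

const pricing: PayPerUsePricing = { pricePerMillionRequests: 0.2, pricePerGbSecond: 0.00002 };
const lowTraffic: Workload = { requestsPerMonth: 500_000, avgDurationMs: 200, memoryGb: 0.5 };
const highTraffic: Workload = { ...lowTraffic, requestsPerMonth: 100_000_000 };

const serverlessLow = serverlessMonthlyCost(lowTraffic, pricing);
const serverlessHigh = serverlessMonthlyCost(highTraffic, pricing);
// Two servers sized for peak traffic at a hypothetical $40 per server per month.
const provisioned = provisionedMonthlyCost(2, 40);
```

With these made-up numbers, the low-traffic workload costs about $1.10 a month against $80 for two always-on servers, while 100 million requests a month comes to about $220. In other words, usage-based billing shines for low or spiky traffic, and for high, steady traffic provisioned capacity can come out cheaper; the real break-even point depends entirely on actual prices and workloads.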

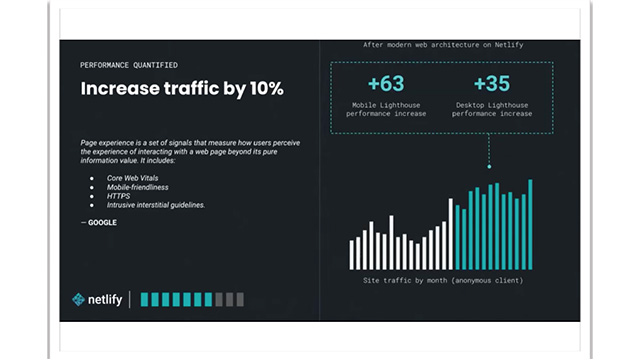

Applications built on a serverless architecture scale automatically, limited in practice only by the provider's quotas. Unlike with fixed servers, there is no need to worry about downtime or performance degradation when traffic suddenly spikes. Of course, costs rise as the number of users and the amount of usage grow.
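Automatic scaling is not free of limits, and it helps to estimate how many function instances a spike will actually require. A standard back-of-the-envelope tool is Little's law: average concurrency equals the request arrival rate multiplied by the average time each request spends in the system. The sketch below assumes each instance handles one request at a time and uses a hypothetical concurrency quota of 1,000.

```typescript
// Little's law: average concurrency = arrival rate * average time per request.
// ASSUMPTION: each instance serves one request at a time (true on some platforms,
// such as AWS Lambda, but not on all of them).
function requiredInstances(requestsPerSecond: number, avgDurationMs: number): number {
  // Multiply before dividing to avoid unnecessary floating-point rounding.
  return Math.ceil((requestsPerSecond * avgDurationMs) / 1000);
}

// HYPOTHETICAL concurrency quota; real limits vary by provider, region, and account.
const CONCURRENCY_LIMIT = 1000;

function checkScaling(requestsPerSecond: number, avgDurationMs: number) {
  const instances = requiredInstances(requestsPerSecond, avgDurationMs);
  return { instances, withinLimit: instances <= CONCURRENCY_LIMIT };
}

const quietHour = checkScaling(5, 120); // light, steady traffic
const flashSale = checkScaling(4000, 120); // a sudden spike
const viralPost = checkScaling(10_000, 120); // a spike beyond the quota
```

Here the flash sale needs about 480 concurrent instances, which the platform provisions on its own, while the last scenario exceeds the hypothetical quota; depending on the platform, requests beyond such a limit are typically throttled or queued.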

I have seen many articles about serverless architecture that list security as a disadvantage. However, top-tier cloud vendors are dedicated to providing secure services with high performance and availability. Because this is central to their business model, they hire some of the best talent in the industry to build and maintain these services and follow established security best practices. Developers still have to secure their own application code, but I consider it a significant advantage that most of the infrastructure security is handled by industry experts.
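Even when the provider secures the infrastructure, validating untrusted input inside the function remains the developer's job. A minimal sketch, assuming a hypothetical newsletter sign-up function that receives a JSON body such as `{"email": "dev@example.com"}`; the size limit and the deliberately simple email check are illustrative choices, not a complete security policy.

```typescript
// Hypothetical payload for a newsletter sign-up function.
interface SignupRequest {
  email: string;
}

type ValidationResult =
  | { ok: true; value: SignupRequest }
  | { ok: false; error: string };

// HYPOTHETICAL limit (in characters) to reject oversized payloads early.
const MAX_BODY_CHARS = 1024;

function validateSignup(rawBody: string | null): ValidationResult {
  if (rawBody === null || rawBody.length === 0) {
    return { ok: false, error: "Missing request body" };
  }
  if (rawBody.length > MAX_BODY_CHARS) {
    return { ok: false, error: "Request body too large" };
  }

  let parsed: unknown;
  try {
    parsed = JSON.parse(rawBody);
  } catch {
    return { ok: false, error: "Body is not valid JSON" };
  }
  if (typeof parsed !== "object" || parsed === null) {
    return { ok: false, error: "Body must be a JSON object" };
  }

  const email = (parsed as Record<string, unknown>).email;
  // Simple shape check only; not a full RFC 5322 email validator.
  if (typeof email !== "string" || !/^[^\s@]+@[^\s@]+\.[^\s@]+$/.test(email)) {
    return { ok: false, error: "Invalid email address" };
  }
  return { ok: true, value: { email } };
}
```

The handler would call `validateSignup` first and return a 400 response whenever `ok` is `false`, so malformed input never reaches the rest of the code.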

The development environment is easy to set up, and with no servers to manage, delivery times are shorter and deployment is faster. This is particularly valuable when building a Minimum Viable Product (MVP). And because everything is decoupled, you can add or remove services freely without changing large amounts of code, as you would have to in a monolithic application.

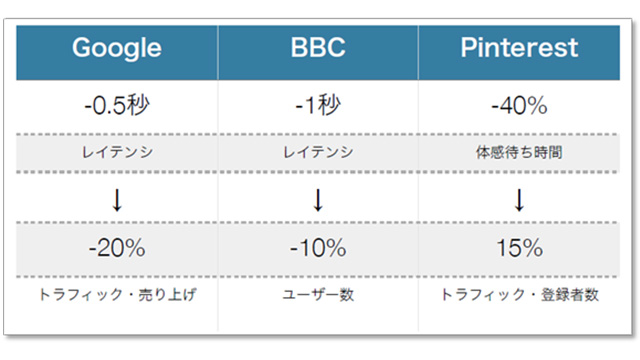

Thanks to Content Delivery Networks (CDNs) and edge networks, serverless functions can now run on servers closer to end users around the world, reducing latency. Representative Jamstack edge computing providers include the following:

- Cloudflare Workers

- AWS Lambda@Edge

- Netlify Edge

- Vercel Edge Functions (recently released)
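Much of the latency benefit of edge execution comes down to physics: a request cannot travel faster than light in optical fiber, roughly 200 km per millisecond. The sketch below picks the nearest location with the haversine great-circle formula and computes a propagation-only lower bound on round-trip time. The deployment (one US East origin plus two edge sites) and the rounded city coordinates are hypothetical, not any provider's actual network.

```typescript
interface Site {
  name: string;
  lat: number;
  lon: number;
}

const EARTH_RADIUS_KM = 6371;
// Light in optical fiber travels at roughly 2/3 of c, about 200 km per millisecond.
const FIBER_KM_PER_MS = 200;

function toRadians(deg: number): number {
  return (deg * Math.PI) / 180;
}

// Great-circle distance between two points (haversine formula).
function distanceKm(a: Site, b: Site): number {
  const dLat = toRadians(b.lat - a.lat);
  const dLon = toRadians(b.lon - a.lon);
  const h =
    Math.sin(dLat / 2) ** 2 +
    Math.cos(toRadians(a.lat)) * Math.cos(toRadians(b.lat)) * Math.sin(dLon / 2) ** 2;
  return 2 * EARTH_RADIUS_KM * Math.asin(Math.sqrt(h));
}

// Propagation-only lower bound; real latency is higher because of routing,
// queuing, and connection handshakes.
function minRoundTripMs(a: Site, b: Site): number {
  return (2 * distanceKm(a, b)) / FIBER_KM_PER_MS;
}

function nearest(user: Site, candidates: Site[]): Site {
  return candidates.reduce((best, c) => (distanceKm(user, c) < distanceKm(user, best) ? c : best));
}

// HYPOTHETICAL deployment: a single origin region plus a few edge locations.
const originRegion: Site = { name: "us-east", lat: 39.04, lon: -77.49 };
const edgeSites: Site[] = [
  originRegion,
  { name: "frankfurt", lat: 50.11, lon: 8.68 },
  { name: "tokyo", lat: 35.68, lon: 139.69 },
];
const parisUser: Site = { name: "paris-user", lat: 48.86, lon: 2.35 };

const closest = nearest(parisUser, edgeSites);
const originRtt = minRoundTripMs(parisUser, originRegion);
const edgeRtt = minRoundTripMs(parisUser, closest);
```

For a user in Paris, the nearest of these locations is Frankfurt, and the lower bound drops from tens of milliseconds (to the US East Coast) to a few milliseconds, before any of the real-world overheads are added.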

2-2 Disadvantages of Serverless Architecture

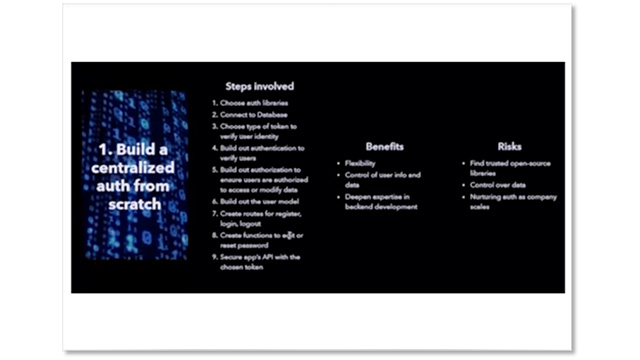

You can mix services from various vendors, but because each vendor takes a different approach, the simplest option is usually to stick with a single cloud service provider such as AWS. As a result, you become heavily dependent on that vendor, and migrating to another provider later can be difficult. If there are any infrastructure issues, you have no choice but to wait for the vendor to resolve them.
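Lock-in cannot be avoided entirely, but its impact can be contained. One common technique, often called ports and adapters (hexagonal architecture), keeps business logic free of provider-specific types and translates at the boundary. In the sketch below, both provider event shapes (`ProviderAEvent`, `ProviderBRequest`) and the order logic are hypothetical.

```typescript
// Provider-agnostic core: plain input in, plain output out.
interface OrderInput {
  orderId: string;
  quantity: number;
}
interface OrderResult {
  status: number;
  message: string;
}

function placeOrder(input: OrderInput): OrderResult {
  if (input.quantity <= 0) {
    return { status: 400, message: "Quantity must be positive" };
  }
  return { status: 201, message: `Order ${input.orderId} accepted` };
}

// HYPOTHETICAL provider-specific shapes; real vendors differ.
interface ProviderAEvent {
  body: string;
}
interface ProviderAResult {
  statusCode: number;
  body: string;
}
interface ProviderBRequest {
  json: { id: string; qty: number };
}
interface ProviderBReply {
  code: number;
  text: string;
}

// Thin adapters: the only code that must change when switching providers.
function providerAHandler(evt: ProviderAEvent): ProviderAResult {
  const parsed = JSON.parse(evt.body) as OrderInput;
  const result = placeOrder(parsed);
  return { statusCode: result.status, body: result.message };
}

function providerBHandler(req: ProviderBRequest): ProviderBReply {
  const result = placeOrder({ orderId: req.json.id, quantity: req.json.qty });
  return { code: result.status, text: result.message };
}
```

Moving from one provider to another then means writing a new adapter of a few lines, while `placeOrder` and its tests stay untouched.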

In many cases, code from multiple customers runs on the same physical server at the same time. This is made possible by multi-tenancy: each customer is a tenant who can access only its own share of the server. As a result, a misconfiguration of that server could expose one tenant's data to another.

Serverless functions do not run continuously. When a function is invoked for the first time (or after sitting idle), it requires a "cold start": a container must be spun up before the function can execute. This can degrade performance, but the container typically stays alive for a period after the call completes, so subsequent invocations get a "warm start" without that extra latency. Additionally, thanks to edge computing, cold starts are becoming less of an issue, and this should keep improving over time.
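The cold/warm distinction can be modeled as a single instance with an idle timeout. This is a toy model: real platforms use their own, generally undocumented and variable, keep-warm policies, and the five-minute window below is a hypothetical value.

```typescript
// Toy model of one function instance's lifecycle.
class FunctionInstanceModel {
  private lastInvocationMs: number | null = null;
  private readonly keepWarmMs: number;

  constructor(keepWarmMs: number) {
    this.keepWarmMs = keepWarmMs;
  }

  // Returns "cold" if a new container would have to be spun up, "warm" otherwise.
  invoke(atMs: number): "cold" | "warm" {
    const isWarm =
      this.lastInvocationMs !== null && atMs - this.lastInvocationMs <= this.keepWarmMs;
    this.lastInvocationMs = atMs;
    return isWarm ? "warm" : "cold";
  }
}

// HYPOTHETICAL 5-minute idle window; invocation times are in milliseconds.
const instance = new FunctionInstanceModel(5 * 60_000);
const timeline = [0, 30_000, 120_000, 900_000, 905_000].map((t) => instance.invoke(t));
```

The first call and the call after a long idle gap are cold; the rest are warm. A related, widely used practice is to create expensive resources such as database or SDK clients at module scope rather than inside the handler, so warm invocations can reuse them instead of paying the setup cost again.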

Because backend processes are managed by the cloud provider, you have less visibility into them, which makes debugging more complex. It can also be difficult to replicate a serverless environment for integration testing. However, it's not all bad news: as the serverless ecosystem grows, new platforms and services are appearing to address these challenges. One example is Datadog's end-to-end serverless monitoring.
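Whatever monitoring product you end up using, logs are much easier to follow across functions when every line is structured and carries a shared request ID. The sketch below writes JSON log lines with a correlation ID. The field names (`correlationId`, `fn`), the injected `sink`, and the two-function checkout flow are hypothetical conventions, not the format of any particular monitoring tool.

```typescript
type Level = "info" | "error";

// The sink is injected so lines can go to stdout, a test buffer, and so on.
type Sink = (line: string) => void;

// Every line from this logger carries the function name and the correlation ID.
function makeLogger(fn: string, correlationId: string, sink: Sink) {
  const write = (level: Level, message: string, extra: Record<string, unknown> = {}) =>
    sink(JSON.stringify({ ...extra, level, correlationId, fn, message }));
  return {
    info: (message: string, extra?: Record<string, unknown>) => write("info", message, extra),
    error: (message: string, extra?: Record<string, unknown>) => write("error", message, extra),
  };
}

// HYPOTHETICAL flow: two functions handling the same request share one ID.
const lines: string[] = [];
const sink: Sink = (line) => {
  lines.push(line);
};

// In practice the ID comes from an incoming request header or is freshly generated.
const requestId = "req-123";
const checkoutLog = makeLogger("checkout", requestId, sink);
const paymentLog = makeLogger("payment", requestId, sink);

checkoutLog.info("Order received", { items: 3 });
paymentLog.error("Card declined", { reason: "insufficient_funds" });
```

Filtering your log tool on `correlationId` then reconstructs the path of a single request across both functions.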

3. Final Thoughts

Serverless architecture has many use cases. It is often used alongside microservices through REST or GraphQL APIs, particularly for lightweight, low-compute operations with unpredictable traffic.

Migrating from legacy infrastructure to serverless is undoubtedly challenging, especially when it requires a complete overhaul of the application's structure. The good news is that the switch to serverless can be made one step at a time.
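A common way to migrate one step at a time is the strangler fig pattern: requests for paths that have already been migrated go to new serverless functions, everything else still goes to the legacy application, and the list of migrated paths grows until the old system can be retired. In practice the routing usually lives in a reverse proxy, CDN, or the platform's rewrite rules; the function and route prefixes below are a hypothetical sketch of that decision.

```typescript
// Strangler fig routing: migrated paths go to serverless functions,
// everything else still goes to the legacy application.
type Target = "serverless" | "legacy";

// HYPOTHETICAL route prefixes that have already been migrated.
const migratedPrefixes: string[] = ["/api/contact", "/api/search"];

function routeRequest(path: string): Target {
  // Match the prefix exactly or as a whole path segment, so "/api/searching"
  // is not mistaken for "/api/search".
  const migrated = migratedPrefixes.some(
    (prefix) => path === prefix || path.startsWith(prefix + "/"),
  );
  return migrated ? "serverless" : "legacy";
}
```

Each migration step then amounts to moving one endpoint's logic into a function and adding its prefix to the list, which keeps every step small and easy to roll back.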

This relatively new architecture may not meet every need today, but investing time in serverless should pay off in the long run.

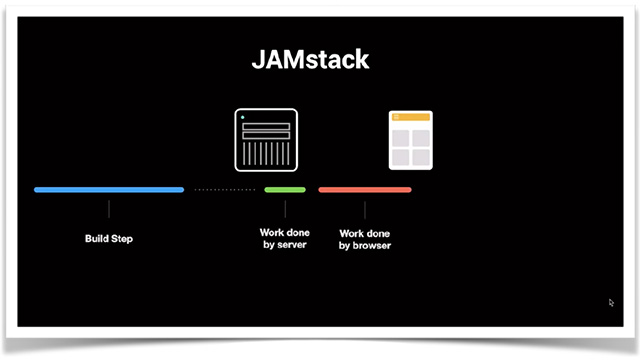

Finally, since Jamstack sites and applications focus on the front end, serverless is the best way to integrate backend functionality.

Companies without experience in these two architectures may understandably hesitate, but Bejamas is here to answer your questions and help ensure a smooth transition.