Our previous post discussed the use cases and benefits of AI synthetic voice tools in multilingual localization, as well as the necessary preparations. This time, we turn to practical matters: checking and correcting the generated audio, along with an example of how our company uses AI synthetic voice tools.


1. Checking Audio Created with AI Synthetic Voice Tools

Last time, we explained why it is necessary to check the audio created with AI synthetic voice tools and the need for a checklist to ensure quality across languages.

"In reality, there are still aspects that synthetic voices cannot fully address, such as differences in intonation based on context, unnatural pauses, or a lack of necessary pauses that make understanding difficult.

Especially in multilingual localization, there are many cases where differences in intonation can change the meaning depending on the language, so post-generation checks are essential."

If checkers work without defined criteria, they may make unnecessary corrections based on personal preference or, conversely, overlook issues by judging them "acceptable." To ensure consistent quality across multiple languages, it is crucial to align the checking criteria across languages.

So, specifically, what kind of checks should be performed?

As mentioned above, it is not enough to simply tell the native staff to "check the audio." It is necessary to clearly communicate the aspects that should be checked in order to meet the required quality standards. In this case, one effective method is the use of a "checklist."

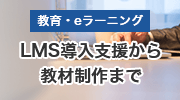

Below is an example of a checklist that we use in our company.

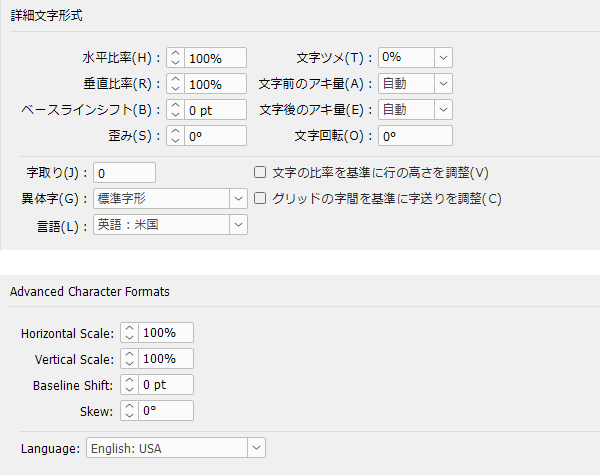

Checklist Structure

This checklist is for cases where the synthetic voice was generated from translated text, so it has columns for "Source Text" and "Translated Text."

The structure is designed to check each pair of source text and translated text listed on the left side based on the following criteria.

・Does the "translated text" column match the audio?

・Are there any unclear or unnatural parts that make the audio hard to understand?

・Is the reading of numerical values correct?

・Is the reading of units correct?

・Is the reading of English abbreviations correct?

It is obviously important to check whether the manuscript and audio match, but the key points are the readings of "numbers," "units," and "English abbreviations."

This is because "numbers" and "units" tend to be misread when using synthetic voice tools. Additionally, less common "abbreviations" may not be pronounced as intended.

Focusing audio checks on the areas where synthetic voice tools tend to struggle is therefore an effective way to ensure audio quality.
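As a rough illustration, the checklist structure described above could be modeled as follows. This is a minimal sketch, not our actual checklist file: the class name, field names, item keys, and sample sentences are all assumptions made up for the example.

```python
from dataclasses import dataclass, field

# Hypothetical keys for the five check items listed above.
CHECK_ITEMS = (
    "matches_translated_text",
    "clear_and_natural",
    "numbers_read_correctly",
    "units_read_correctly",
    "abbreviations_read_correctly",
)


@dataclass
class ChecklistRow:
    """One source/translation pair and the checker's verdict per item."""
    source_text: str
    translated_text: str
    results: dict[str, bool] = field(default_factory=dict)

    def failed_items(self) -> list[str]:
        # An item left unanswered counts as failed, so nothing
        # passes simply because the checker skipped it.
        return [item for item in CHECK_ITEMS if not self.results.get(item, False)]


# Made-up sample row: every item passes except the unit reading.
row = ChecklistRow(
    source_text="最大荷重は5 kgです。",
    translated_text="The maximum load is 5 kg.",
    results={item: True for item in CHECK_ITEMS},
)
row.results["units_read_correctly"] = False
needs_fix = row.failed_items()
```

Using the same item keys for every language is what keeps the criteria aligned: each native checker answers the same five questions for each row.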

2. Modifications to Audio Created with AI Synthesized Voice Tools

Here, we will introduce how to fix the audio issues found during the audio check.

Example 1: Words whose pronunciation changes depending on the part of speech are not being read correctly

AI synthetic voice tools have a limited ability to judge context, and misreadings sometimes occur. For example, the English word "produce" can be a noun (agricultural products such as vegetables and fruits) or a verb (to create). The stress falls on a different syllable for each meaning, but the synthetic voice tool read "produce" as the verb regardless of context.

■Example 1

English: We grow most of our own produce.

(We grow most of our agricultural products ourselves.)

Incorrect audio:

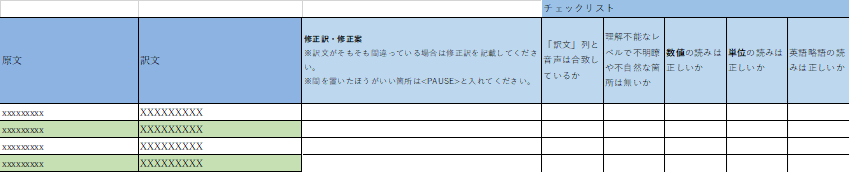

The accent position has become "pro-DUCE." Here is how to correct it. The image below shows an example of the correction.

Modify the accent position to "PRO-duce"

・Raise the pitch of pro: yellow highlight tag group

・Lower the pitch of duce: blue highlight tag group

・Detailed pronunciation corrections: Green highlight

Here is the adjusted audio:

The accent position has been changed to "PRO-duce," and the pronunciation has been correctly adjusted to "produce" instead of "producce."
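The tags in the image are specific to the tool we used. If a tool accepts standard SSML instead (an assumption; tag support varies by tool), a similar correction could be sketched with the `<phoneme>` element, which specifies the pronunciation directly. The function name below is hypothetical, and the IPA strings are general US English transcriptions, not values taken from our project.

```python
import xml.etree.ElementTree as ET

# US English IPA for the two readings of "produce".
PRODUCE_NOUN_IPA = "ˈproʊduːs"  # PRO-duce (agricultural products)
PRODUCE_VERB_IPA = "prəˈduːs"   # pro-DUCE (to create)


def with_phoneme(sentence: str, word: str, ipa: str) -> str:
    """Wrap the first occurrence of `word` in an SSML <phoneme> element."""
    before, found, after = sentence.partition(word)
    if not found:
        raise ValueError(f"{word!r} not found in sentence")
    speak = ET.Element("speak")
    speak.text = before
    phoneme = ET.SubElement(speak, "phoneme", alphabet="ipa", ph=ipa)
    phoneme.text = word
    phoneme.tail = after
    # encoding="unicode" returns a str and keeps the IPA characters as-is.
    return ET.tostring(speak, encoding="unicode")


ssml = with_phoneme("We grow most of our own produce.", "produce", PRODUCE_NOUN_IPA)
```

Whether a phoneme tag or pitch tags work better depends on the tool; in either case the corrected audio should go through the same checklist again.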

Example 2: Inconsistency in the reading of abbreviations and proper nouns

In cases of abbreviations or less frequently used specific proper nouns, incorrect pronunciations may be output, or inconsistencies may arise due to different readings depending on the context. Here, we take the abbreviation "ISO" as an example. It goes without saying that it stands for "International Organization for Standardization," and since it is a term commonly used, one might think that errors are unlikely to occur. However, in languages such as the following, there are multiple patterns for reading "ISO." In such cases, inconsistencies in readings are likely to occur.

・English → "I-S-O" (spelled out) or "Aiso" (read as a word)

・Indonesian → "I-S-O" (spelled out) or "Iso" (read as a word)

In the following example, when written with a space as "ISO 12100" and without a space as "ISO12100", different readings were produced.

■Example Sentence 2

English: I will explain the contents of ISO 12100.

Indonesian: I will explain the content of ISO 12100.

(This will explain the contents of ISO 12100.)

In such cases, it is necessary to standardize the pronunciation through audio correction. This time, we established a policy to pronounce it as "Aiso" in English and "Iso" in Indonesian, and adjusted the audio accordingly.

English:

Indonesian:
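One way to keep such readings consistent is to normalize the script before generating audio, so that "ISO 12100" and "ISO12100" reach the tool in the same form. The sketch below assumes a tool that accepts the standard SSML `<sub alias="...">` element; the function name and the reading table are hypothetical, and the alias spellings may need tuning for a given voice.

```python
import re

# Readings decided per language (the policy described above).
ISO_READINGS = {"en": "Aiso", "id": "Iso"}

# "ISO" followed by a number, with or without a space in between.
_ISO_NUMBER = re.compile(r"\bISO\s*(?=\d)")


def unify_iso(script: str, lang: str) -> str:
    """Give every 'ISO <number>' the same spacing and the agreed reading."""
    alias = ISO_READINGS[lang]
    return _ISO_NUMBER.sub(f'<sub alias="{alias}">ISO</sub> ', script)


with_space = unify_iso("I will explain the contents of ISO 12100.", "en")
without_space = unify_iso("I will explain the contents of ISO12100.", "en")
```

Both spellings now produce identical input for the tool, so the reading no longer depends on how the translator happened to type the standard number.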

3. Case Studies of Multilingual Localization Using AI Synthesized Voices

We will introduce examples of multilingual localization using AI-generated voice at our company.

■Overview

| Industry | Manufacturing |
|---|---|
| Subject | Training materials (PowerPoint materials + lecture audio by instructors) |
| Language | Japanese → English/Chinese, English → Indonesian |
| Quantity | Approximately 100,000 words/language |
| Production Period | About 4 months |
| Deliverables | PowerPoint materials with audio inserted in 3 languages |

■Points

From the above, the following points emerge.

[Required Tasks]

・PowerPoint materials: translation

・Lecture audio: text transcription + translation + audio generation in each language

[Notes]

・The production period is short relative to the volume.

・Because these are training materials, conveying the meaning is the top priority; expressive richness matters less.

As noted in [Notes], this project prioritized accuracy over expressiveness and had a tight schedule. In such cases, using tools is very effective.

For this project, we chose the following methods.

★Voice: AI synthesized voice + verification and correction by a native speaker

★Translation: Machine Translation + Human Editing (Post-Editing)

The key point is to streamline work using tools while ensuring that manual corrections are made to guarantee accuracy.

For voice verification, we used checklists as introduced in the first half of this article, and made corrections in the tool as described above.

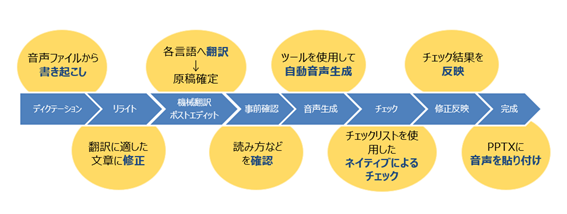

■Workflow

The specific workflow has been established as follows.

・Lecture Audio:

①Transcribe the lecture audio and rewrite the transcript as a script

②Create audio scripts in each language using machine translation

③Generate audio from the scripts using AI synthetic voice tools

・PowerPoint Materials:

①Translation into various languages using machine translation

Once the audio for each language and the PowerPoint are completed, insert the audio into the slides to finish.

■Schedule

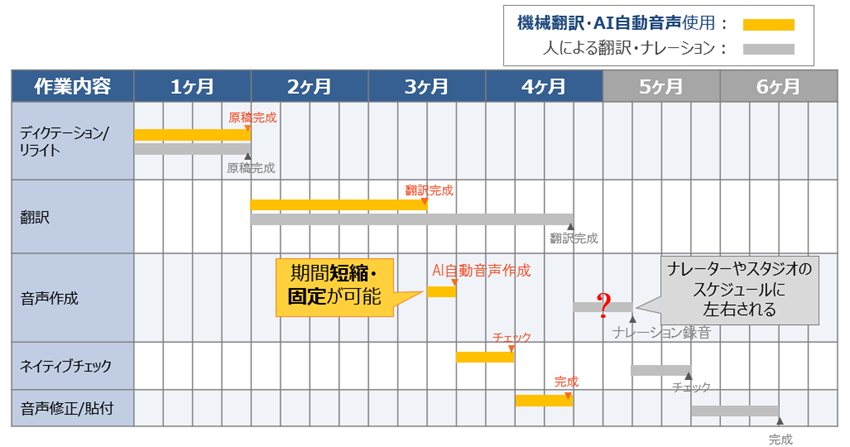

One of the clear benefits of using tools is the schedule. By using machine translation and AI-generated voice tools, the schedule can be significantly shortened. Below is a comparison table of the actual project schedule (orange line) and the estimated schedule (gray line) if translation and voice creation were done manually.

The work period for translation and voice creation is clearly much shorter. Done manually, the work was estimated to take more than 6 months, well beyond the 4 months available for this project.

Voice creation has one more advantage. When a person records the narration, the recording schedule is hard to pin down: the work itself takes a long time, and it also depends on the availability of the narrator and the studio. With AI synthetic voice, as long as you have the tools, you can work at any time and in any place, which makes it easy to lock in the schedule.

By using tools in this way, we were able to both shorten and lock in the work period, which left sufficient time for quality improvement efforts such as native checks, even within the limited timeframe of four months.

4. Summary

In the first half of this article, we introduced effective voice checking and correction methods for using AI synthetic voice tools in multilingual localization.

We looked at points where errors unique to synthetic voices are likely to occur and how to correct them. In the second half, we provided guidance on the points and benefits of using these tools through actual cases where we utilized AI synthetic voice tools (along with machine translation) at our company.

Additionally, the content of this article was also covered in our seminars held on September 7 and 11, 2023. You can download the materials used in the seminar from here. This page also contains other useful materials for manual production, translation, and more, so please take a look.

Related Articles

Utilizing AI Synthesized Voice Tools in Multilingual Localization