Segment alignment of English and Japanese translations is an essential task in building translation memories and organizing bilingual data. It is tedious to do by hand, but with Google Colab and the multilingual sentence embedding model LaBSE, anyone can align sentences automatically and accurately. This article walks through the entire process: uploading English and Japanese text files, aligning them sentence by sentence, and downloading the results in TMX or TSV format.


1. What is "Colab"?

Google Colab is a free, cloud-based notebook environment provided by Google. You can run Python code directly in your browser, with free (usage-limited) access to GPUs and TPUs. Nothing needs to be installed, and uploading and downloading files or visualizing data is simple, which makes Colab well suited to machine learning and natural language processing experiments. A major advantage is that even programming beginners can follow along by running the code cells in order.

2. What is "LaBSE"?

LaBSE (Language-agnostic BERT Sentence Embedding) is a multilingual sentence embedding model developed by Google Research. It supports over 100 languages, and its key feature is that sentences with similar meanings are mapped to similar vectors (numerical representations) even when they are written in different languages.

LaBSE is highly useful for evaluating translation quality, cross-lingual search, and multilingual document alignment. In this project, English and Japanese sentences are vectorized using LaBSE, and the pairs that best match in semantic similarity (cosine similarity) are automatically identified.
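As a concrete illustration of the similarity measure, cosine similarity divides the dot product of two vectors by the product of their lengths: cos(u, v) = (u · v) / (‖u‖ ‖v‖). It ranges from -1 to 1, and values close to 1 mean the two vectors point in nearly the same direction, which for LaBSE embeddings means the sentences are close in meaning. The sketch below uses made-up three-dimensional vectors (real LaBSE embeddings have 768 dimensions), and the helper name `cosine` is hypothetical; it computes the same quantity that scikit-learn's `cosine_similarity` returns for each pair of rows.

```python
import numpy as np

def cosine(u, v):
    """Hypothetical helper: dot product divided by the product of the vector norms."""
    u = np.asarray(u, dtype=float)
    v = np.asarray(v, dtype=float)
    return float(np.dot(u, v) / (np.linalg.norm(u) * np.linalg.norm(v)))

# Toy 3-dimensional "embeddings" (made-up numbers, not real LaBSE output)
en_vec = [0.9, 0.1, 0.3]
ja_vec = [1.8, 0.2, 0.6]   # same direction as en_vec, just twice as long
other = [-0.1, 0.9, 0.0]   # perpendicular to en_vec

print(round(cosine(en_vec, ja_vec), 4))  # 1.0: same direction
print(round(cosine(en_vec, other), 4))   # 0.0: unrelated direction
```

Because only the direction matters, a vector and a scaled copy of it score 1.0 regardless of their lengths.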

2-1. Step 1: Setup and File Upload

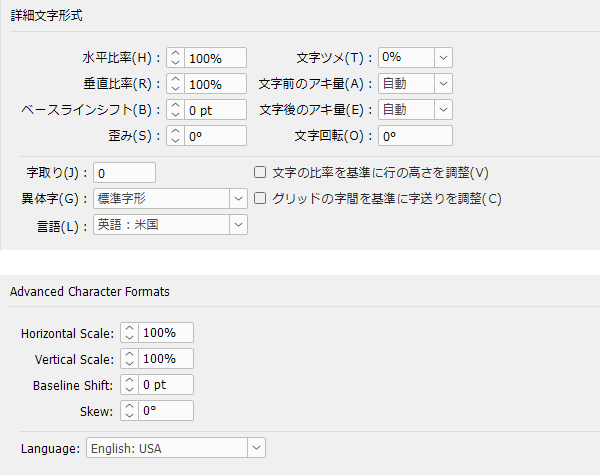

First, set up the library environment on Google Colab by copying the setup code into a new Colab code cell and running it. Do the same for each of the following steps.
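If you are not sure whether the libraries used in the later steps are already available in your Colab runtime, a quick check like the one below can help. This is a minimal sketch: `REQUIRED` and `missing_modules` are hypothetical names, and the module list simply mirrors the imports in the following steps. The import name can differ from the package name you install; for example, `sentence_transformers` is installed with `!pip install sentence-transformers`, and `sklearn` comes from the `scikit-learn` package.

```python
import importlib.util

# Hypothetical list: the modules imported in the later steps
# (google.colab is left out because it exists only inside Colab)
REQUIRED = ["nltk", "sentence_transformers", "sklearn", "numpy", "pandas", "tqdm"]

def missing_modules(names):
    """Hypothetical helper: return the module names that cannot be found."""
    return [name for name in names if importlib.util.find_spec(name) is None]

missing = missing_modules(REQUIRED)
if missing:
    print("Not installed:", ", ".join(missing))
else:
    print("All required libraries are available.")
```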

Next, upload the English and Japanese text files. Save them as UTF-8 .txt files, since the later steps decode them as UTF-8.

```python
# Upload the files
from google.colab import files

print("🔼 Please upload the English file (.txt)")
uploaded_en = files.upload()

print("🔼 Please upload the Japanese file (.txt)")
uploaded_jp = files.upload()
```
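`files.upload()` returns a dictionary that maps each uploaded file name to the file's contents as bytes, which is why the next steps call `.decode('utf-8')` on the values. If you want to run the same steps outside Colab, a hypothetical helper such as `load_local_files` can build a dictionary of the same shape from local paths; the file names in the usage comments are placeholders.

```python
from pathlib import Path

def load_local_files(paths):
    """Hypothetical stand-in for files.upload(): map each file name to its raw bytes."""
    return {Path(p).name: Path(p).read_bytes() for p in paths}

# Usage outside Colab (placeholder file names):
# uploaded_en = load_local_files(["english.txt"])
# uploaded_jp = load_local_files(["japanese.txt"])
```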

2-2. Step 2: Sentence Segmentation

Split the English text into sentences with NLTK.

```python
# Read the English file and split it into sentences
import nltk

# Download the sentence tokenizer data (needed only on the first run)
nltk.download('punkt_tab')

# List that will hold one English sentence per element
en_segments = []

# Read each uploaded file (uploaded_en maps file name -> file bytes)
for filename in uploaded_en:
    text = uploaded_en[filename].decode('utf-8')

    # Process the text line by line and split each line into sentences
    for line in text.splitlines():
        line = line.strip()
        if line:  # skip blank lines
            sentences = nltk.sent_tokenize(line)
            en_segments.extend(sentences)

# Check the result (optional): show the first 10 segments
for i, sent in enumerate(en_segments[:10]):
    print(f"{i+1}: {sent}")

print(f"✅ Number of English segments: {len(en_segments)}")
```

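Because Japanese does not separate sentences with spaces, the Japanese text is split with a regular expression that breaks the text after sentence-ending punctuation (。, ！, ？).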

```python
# Read the Japanese file and split it into sentences
import re

def split_ja(text):
    text = text.replace('\r\n', '\n').replace('\r', '\n')  # normalize line endings

    # Split on line breaks first, then after sentence-ending punctuation
    # (full-width 。！？ and half-width !?)
    segments = []
    for line in text.splitlines():
        line = line.strip()
        if not line:
            continue
        sents = re.split(r'(?<=[。！？!?])\s*', line)
        segments.extend(s.strip() for s in sents if s.strip())
    return segments

jp_segments = []
for name, data in uploaded_jp.items():
    jp_segments += split_ja(data.decode('utf-8'))

print(f"✅ Number of Japanese segments: {len(jp_segments)}")
```
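To see what the split pattern does, here it is applied to one made-up line. Japanese text normally ends questions and exclamations with full-width ！ and ？, so the character class lists both the full-width and half-width forms; with only the ASCII `!?`, the full-width ！ is not recognized as a sentence boundary and two sentences stay joined. `SPLIT_PATTERN` and `ascii_only` are illustrative names.

```python
import re

# Illustrative name: split after 。, full-width ！？, or half-width !?,
# consuming any whitespace that follows the punctuation
SPLIT_PATTERN = r'(?<=[。！？!?])\s*'

line = "今日は晴れです。散歩に行きましょう！ 準備はいいですか？"
parts = [s.strip() for s in re.split(SPLIT_PATTERN, line) if s.strip()]
print(parts)
# ['今日は晴れです。', '散歩に行きましょう！', '準備はいいですか？']

# With only ASCII !? in the class, the full-width ！ is not treated as a boundary
ascii_only = [s for s in re.split(r'(?<=[。!?])\s*', line) if s.strip()]
print(ascii_only)
# ['今日は晴れです。', '散歩に行きましょう！ 準備はいいですか？']
```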

2-3. Step 3: Automatically Pair Semantically Similar Sentences with LaBSE

Each sentence is converted into a vector with LaBSE. Then, for every Japanese sentence, the English sentence with the highest cosine similarity is selected as its pair.

```python
# Load the LaBSE model and vectorize the sentences
from sentence_transformers import SentenceTransformer
from sklearn.metrics.pairwise import cosine_similarity
from tqdm import tqdm
import numpy as np

model = SentenceTransformer('sentence-transformers/LaBSE')

en_embeddings = model.encode(en_segments, convert_to_numpy=True, show_progress_bar=True)
jp_embeddings = model.encode(jp_segments, convert_to_numpy=True, show_progress_bar=True)

tm_pairs = []

# For each Japanese sentence vector, compute its similarity to every English sentence
for i, jp_vec in tqdm(enumerate(jp_embeddings), total=len(jp_segments)):
    sims = cosine_similarity([jp_vec], en_embeddings)[0]
    top_idx = int(np.argmax(sims))    # index of the most similar English sentence
    sim_score = float(sims[top_idx])  # its similarity score
    tm_pairs.append((en_segments[top_idx], jp_segments[i], sim_score))
```

Note that the best match is chosen independently for each Japanese sentence, so the same English sentence can appear in more than one pair, and English sentences that are not the best match for any Japanese sentence do not appear in the output.
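If you prefer each sentence to be used at most once and weak matches to be dropped, one alternative (not the method used in this article) is a greedy one-to-one assignment: visit all candidate pairs from highest to lowest similarity and accept a pair only when neither sentence has been used yet. In the sketch below, `greedy_align` is a hypothetical helper and the default threshold of 0.5 is an assumed value that should be tuned on your own data.

```python
import numpy as np

def greedy_align(sims, threshold=0.5):
    """Hypothetical helper: greedy one-to-one alignment on a similarity matrix.

    sims[i][j] is the similarity between Japanese sentence i and English sentence j.
    Returns (jp_index, en_index, score) tuples sorted by jp_index.
    """
    sims = np.asarray(sims, dtype=float)
    # Flat indices of all (i, j) cells, ordered from highest to lowest similarity
    order = np.argsort(sims, axis=None)[::-1]
    used_jp, used_en, pairs = set(), set(), []
    for flat in order:
        i, j = (int(x) for x in np.unravel_index(flat, sims.shape))
        score = float(sims[i, j])
        if score < threshold:
            break  # every remaining cell scores lower, so stop here
        if i in used_jp or j in used_en:
            continue  # one of the two sentences is already paired
        used_jp.add(i)
        used_en.add(j)
        pairs.append((i, j, score))
    return sorted(pairs)

# Toy matrix: Japanese sentences 0 and 1 both prefer English sentence 0
sims = [[0.9, 0.2, 0.1],
        [0.85, 0.3, 0.6],
        [0.1, 0.4, 0.2]]
print(greedy_align(sims))  # [(0, 0, 0.9), (1, 2, 0.6)]
```

To use it with the embeddings from this step, compute the full matrix with `sims = cosine_similarity(jp_embeddings, en_embeddings)` and then build `tm_pairs = [(en_segments[j], jp_segments[i], s) for i, j, s in greedy_align(sims)]`. The full matrix holds one value per Japanese-English sentence combination, so memory use grows quickly for very long documents.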

2-4. Step 4: Export in TMX/TSV Format

The created translation pairs can be saved and downloaded in TMX format, which can be used with translation memory tools, or in TSV format, which is easy to view in Excel and other programs.

The following code saves the pairs as a TMX file and downloads it.

```python
# Build the TMX file and download it
from xml.etree.ElementTree import Element, SubElement, ElementTree
from google.colab import files

# TMX root element and header
tmx = Element('tmx', version="1.4")
header = SubElement(tmx, 'header', {
    'creationtool': 'ColabTM',
    'creationtoolversion': '1.0',
    'segtype': 'sentence',
    'o-tmf': 'ColabTM',  # required by the TMX 1.4 header; the value is an arbitrary label
    'adminlang': 'en-US',
    'srclang': 'en',
    'datatype': 'PlainText'
})
body = SubElement(tmx, 'body')

# One <tu> (translation unit) per English-Japanese pair; the score is not written to the TMX
for en, ja, score in tm_pairs:
    tu = SubElement(body, 'tu')

    tuv_en = SubElement(tu, 'tuv', {'xml:lang': 'en'})
    seg_en = SubElement(tuv_en, 'seg')
    seg_en.text = en

    tuv_ja = SubElement(tu, 'tuv', {'xml:lang': 'ja'})
    seg_ja = SubElement(tuv_ja, 'seg')
    seg_ja.text = ja

# Save and download
tree = ElementTree(tmx)
tree.write('output.tmx', encoding='utf-8', xml_declaration=True)
files.download('output.tmx')
```
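A quick way to check the exported file is to parse it back and look at a few translation units. The sketch below uses only the standard library; `read_tmx_pairs` and `XML_LANG` are hypothetical names. When ElementTree parses a file, the `xml:lang` attribute comes back under the key `{http://www.w3.org/XML/1998/namespace}lang`, so that is the key the sketch looks up.

```python
import xml.etree.ElementTree as ET

# Key under which ElementTree reports the xml:lang attribute after parsing
XML_LANG = '{http://www.w3.org/XML/1998/namespace}lang'

def read_tmx_pairs(source):
    """Hypothetical helper: return one {language: segment text} dict per <tu>."""
    root = ET.parse(source).getroot()
    units = []
    for tu in root.iter('tu'):
        unit = {}
        for tuv in tu.findall('tuv'):
            unit[tuv.get(XML_LANG)] = tuv.findtext('seg')
        units.append(unit)
    return units

# Usage in Colab, after the export step:
# pairs = read_tmx_pairs('output.tmx')
# print(len(pairs), pairs[:3])
```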

The following code saves the pairs, together with their similarity scores, as a TSV file and downloads it.

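```python
# Build the TSV file and download it
import pandas as pd
from google.colab import files

# Create a DataFrame from tm_pairs
df_tsv = pd.DataFrame(tm_pairs, columns=["English", "Japanese", "Similarity"])

# Save as TSV; the UTF-8 BOM ("utf-8-sig") helps Excel recognize the encoding of the Japanese text
tsv_path = "output.tsv"
df_tsv.to_csv(tsv_path, sep="\t", index=False, encoding="utf-8-sig")

files.download(tsv_path)
```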
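Pairs with low similarity scores tend to be the ones that are misaligned, so reviewing them first is a practical check before importing the results into a translation memory tool. The sketch below reads the TSV back with Python's `csv` module and lists the rows below a cut-off, lowest score first. `low_score_pairs` is a hypothetical helper and 0.7 is an assumed threshold, not a recommended value. Opening the file with `utf-8-sig` reads it correctly whether or not it starts with a UTF-8 BOM.

```python
import csv

def low_score_pairs(tsv_path, threshold=0.7):
    """Hypothetical helper: rows whose Similarity is below threshold, lowest first."""
    rows = []
    with open(tsv_path, encoding='utf-8-sig', newline='') as f:
        for row in csv.DictReader(f, delimiter='\t'):
            score = float(row['Similarity'])
            if score < threshold:
                rows.append((row['English'], row['Japanese'], score))
    return sorted(rows, key=lambda r: r[2])

# Usage in Colab, after the export step:
# for en, ja, score in low_score_pairs('output.tsv'):
#     print(f"{score:.2f}\t{en}\t{ja}")
```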

3. Supplement

In this example, we focused on the English-Japanese pair, but the same method works for any of the more than 100 languages that LaBSE supports, such as English and French or Chinese and Spanish. By swapping in files in the other languages and adapting the sentence-splitting step to each language, the same workflow can also build multilingual translation memories, which makes the approach well suited to projects that deploy content in many languages.
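For space-delimited languages such as French or Spanish, NLTK's `sent_tokenize` accepts a language name, for example `nltk.sent_tokenize(line, language='french')`. For Chinese, a punctuation-based split like the Japanese one is a natural starting point. The sketch below illustrates choosing a splitting rule per language; `split_sentences` and `CJK_LANGS` are hypothetical names, and the regex rule for space-delimited languages is a simplification that, unlike NLTK, will also split after abbreviations such as "Dr.".

```python
import re

# Hypothetical set: languages that end sentences with 。！？ and use no spaces between sentences
CJK_LANGS = {"ja", "zh"}

def split_sentences(text, lang):
    """Hypothetical helper: split text into sentences with a per-language punctuation rule."""
    if lang in CJK_LANGS:
        # Split right after sentence-ending punctuation, with or without following spaces
        pattern = r'(?<=[。！？!?])\s*'
    else:
        # Simplified rule for space-delimited languages: . ! ? followed by whitespace
        pattern = r'(?<=[.!?])\s+'
    segments = []
    for line in text.splitlines():
        segments.extend(s.strip() for s in re.split(pattern, line) if s.strip())
    return segments

print(split_sentences("我喜欢猫。你呢？", "zh"))      # ['我喜欢猫。', '你呢？']
print(split_sentences("Bonjour. Ça va? Oui!", "fr"))  # ['Bonjour.', 'Ça va?', 'Oui!']
```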

4. Summary

This article explained how to align segments by meaning using LaBSE. The method can be used to build translation memories, create training data for machine translation, and prepare parallel corpora. The steps are designed to be accessible even to programming beginners, so please feel free to make use of them.

At Human Science, we also support the construction of translation memories, the creation of training data for machine translation, and the preparation of bilingual corpora. For inquiries or requests, please contact us through our inquiry form.