Since the release of the Japanese version of DeepL in March 2020, its high translation accuracy has been a topic of discussion.

At Human Science, we have been evaluating machine translation in a range of fields, including IT, general business, manufacturing, and healthcare/pharmaceuticals.

In a previous article, we shared the results of comparing DeepL with other engines such as Google on business emails and new drug approval application documents.

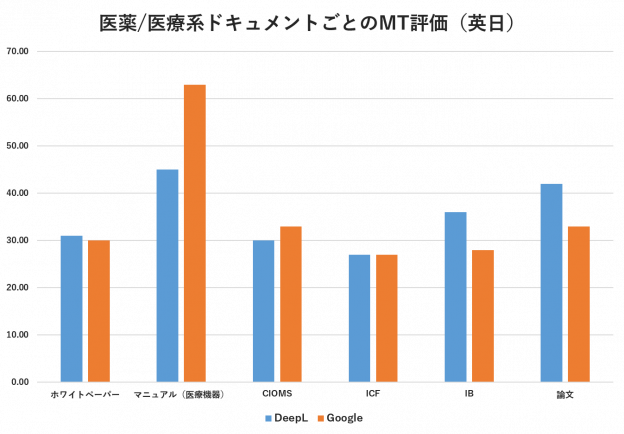

This time, we focused on six types of documents in the medical and pharmaceutical fields: white papers, manuals (for medical devices), CIOMS forms, informed consent forms (ICF), investigator's brochures (IB), and research papers. In addition to the automatic evaluation we used previously, we also conducted a manual evaluation.

Table of Contents

1. Evaluation Method

2. Results of Automatic Evaluation BLEU Score

3. Results of Manual Evaluation

4. Summary

1. Evaluation Method

Language Pair: English → Japanese

Target Documents: Six types (white papers, manuals for medical devices, CIOMS, ICF, IB, and research papers)

Evaluation Volume: Approximately 1,000 words (around 50 sentences) per document type

Evaluation Criteria: Automatic evaluation (BLEU score) and manual evaluation

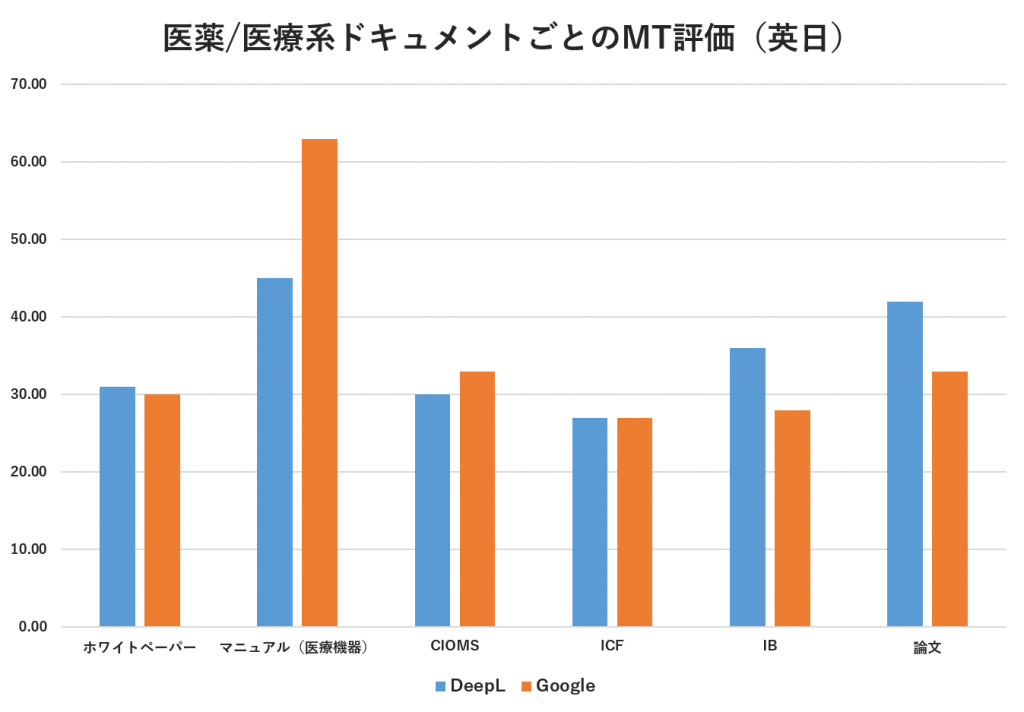

2. Results of Automatic Evaluation BLEU Score

The BLEU scores from the automatic evaluation differed by document type.

・White papers, IB, research papers: DeepL has a higher score

・Manuals (medical devices), CIOMS: Google has a higher score

・ICF: DeepL and Google are comparable

A BLEU score of 30 or higher is generally taken to indicate a comprehensible translation of moderate quality.

For document types that score above 30, machine translation followed by post-editing may be more efficient than human translation from scratch.
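To make the score and the threshold of 30 concrete, here is a minimal sketch of a corpus-level BLEU calculation in plain Python. It is not the tool used for this evaluation; the function names (`corpus_bleu`, `worth_post_editing`) are hypothetical, and it assumes one reference translation per sentence and text that has already been split into tokens (for Japanese, that would require a separate tokenizer, which is not shown here).

```python
import math
from collections import Counter


def ngrams(tokens, n):
    """Count the n-grams of length n in a list of tokens."""
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def corpus_bleu(hypotheses, references, max_n=4):
    """Corpus-level BLEU on a 0-100 scale, one reference per sentence.

    hypotheses and references are lists of token lists (assumed pre-tokenized).
    No smoothing is applied: if any n-gram order has zero matches, the score is 0.
    """
    matches = [0] * max_n
    totals = [0] * max_n
    hyp_len = ref_len = 0
    for hyp, ref in zip(hypotheses, references):
        hyp_len += len(hyp)
        ref_len += len(ref)
        for n in range(1, max_n + 1):
            hyp_counts = ngrams(hyp, n)
            ref_counts = ngrams(ref, n)
            # Clipped counts: an n-gram is credited at most as often as it
            # appears in the reference.
            matches[n - 1] += sum((hyp_counts & ref_counts).values())
            totals[n - 1] += sum(hyp_counts.values())
    if hyp_len == 0 or min(matches) == 0:
        return 0.0
    # Geometric mean of the n-gram precisions.
    log_precision = sum(math.log(m / t) for m, t in zip(matches, totals)) / max_n
    # The brevity penalty lowers the score when the output is shorter than the reference.
    brevity_penalty = 1.0 if hyp_len > ref_len else math.exp(1 - ref_len / hyp_len)
    return 100 * brevity_penalty * math.exp(log_precision)


def worth_post_editing(bleu, threshold=30.0):
    """Apply the rule of thumb from this article: 30 or higher is a candidate for MT plus post-editing."""
    return bleu >= threshold


# Illustrative sentences only, not data from this evaluation.
hypothesis = "the cat sat on the mat".split()
reference = "the cat sat on a mat".split()
score = corpus_bleu([hypothesis], [reference])
print(round(score, 1), worth_post_editing(score))
```

In practice an established implementation would be preferable to a hand-written one, since tokenization and smoothing choices change the numbers and scores are only comparable when computed the same way.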

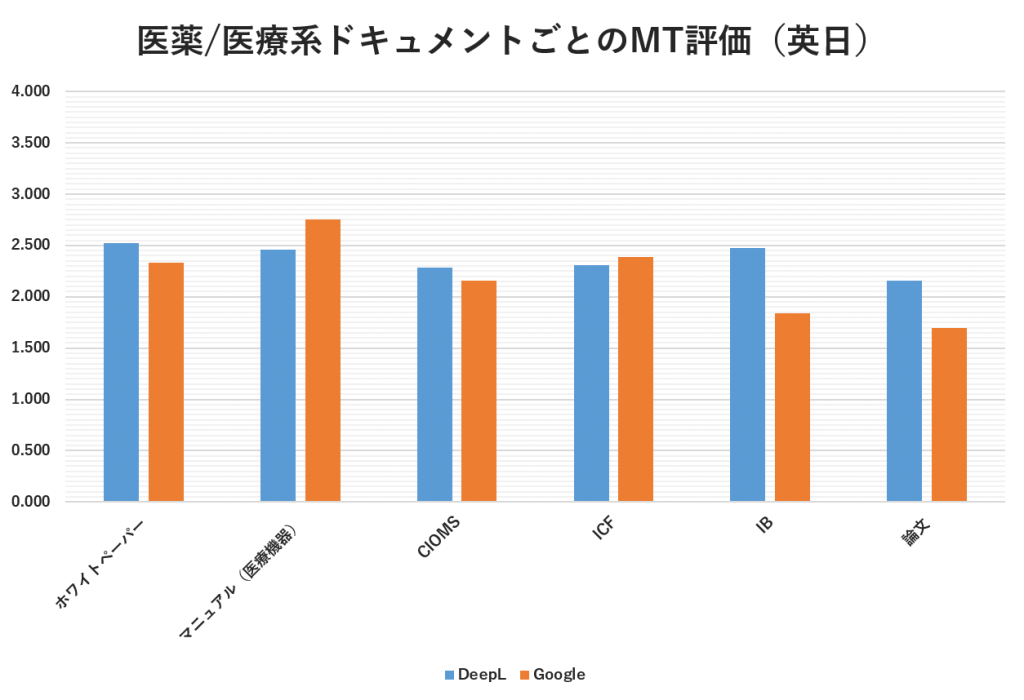

3. Results of Manual Evaluation

What happens when a human evaluates the translations?

A reviewer responsible for medical and pharmaceutical translation at Human Science evaluated the same documents, assigning each sentence a score from 1 to 4.

The scoring criteria are as follows.

4: Translation time can be significantly reduced. Almost no corrections needed. Only punctuation and 1-2 word adjustments are required.

3: Translation time can be shortened. Corrections to words and rearrangements of order are necessary.

2: Cannot shorten translation time. It is helpful as a reference, but translating from scratch is faster.

1: Cannot shorten translation time. Not helpful at all.

・White papers, IB, research papers, CIOMS: DeepL scores higher

・Manuals (medical devices), ICF: Google scores higher

Overall, the results were similar to the automatic evaluation, with two differences: for CIOMS the result was reversed, with DeepL scoring higher, and for ICF, Google's quality was slightly higher.

At Human Science, we consider a score of 2.5 or higher to mean that machine translation can be used to streamline the translation process.

On that basis, we believe that white papers and manuals (for medical devices) are suitable for machine translation with post-editing.
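The 2.5 threshold can be applied as in the following sketch. It assumes that the document-level score is the simple average of the per-sentence scores, which the article does not state explicitly. The function names and the sample scores are hypothetical and are not data from this evaluation.

```python
from statistics import mean

# Threshold stated in this article for using machine translation with post-editing.
POST_EDIT_THRESHOLD = 2.5


def average_score(sentence_scores):
    """Average the per-sentence scores (each an integer from 1 to 4)."""
    for score in sentence_scores:
        if score not in (1, 2, 3, 4):
            raise ValueError(f"score must be 1, 2, 3, or 4, got {score!r}")
    return mean(sentence_scores)


def suitable_for_post_editing(sentence_scores, threshold=POST_EDIT_THRESHOLD):
    """Return True when the average score reaches the threshold."""
    return average_score(sentence_scores) >= threshold


# Hypothetical scores for two document types, for illustration only.
sample_scores = {
    "document type A": [4, 3, 3, 2],
    "document type B": [2, 2, 3, 1],
}
for name, scores in sample_scores.items():
    print(name, average_score(scores), suitable_for_post_editing(scores))
```

Keeping the per-sentence scores rather than only the average also makes it possible to see how many sentences fall into categories 1 and 2, where post-editing saves no time.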

4. Summary

Our evaluation showed that DeepL often delivers higher translation quality than Google.

However, it is not possible to say definitively that either DeepL or Google is better. Quality varies with the type of document being translated, so it is important to choose a machine translation engine based on an evaluation of how well it performs on the documents in question.