Since ChatGPT became publicly available on November 30, 2022, its use in business has rapidly expanded. Our company has focused on its potential since its release and has been working on applying it to annotation tasks. As part of this effort, we published a blog post in 2023 titled "Can Annotation Be Done with ChatGPT?"

The conclusion at that time was, "It is more useful than imagined, but since there are omissions in tagging, it is necessary for humans to check it."

Reference link:

Can Annotation Be Done with ChatGPT?

About two and a half years have passed since then, and ChatGPT has evolved to its latest version, GPT-4o. So, what kind of tagging (annotation) is now possible with the latest version? In this post, we re-examine "tagging text," one of the tasks we tested in 2023.


1. What is ChatGPT?

There are various types of AI, each specializing in different areas, such as identification AI tailored for specific tasks (e.g., object detection), strategic AI that can defeat world champions in chess, and generative AI like ChatGPT that can naturally converse with people.

So, what kind of AI is ChatGPT?

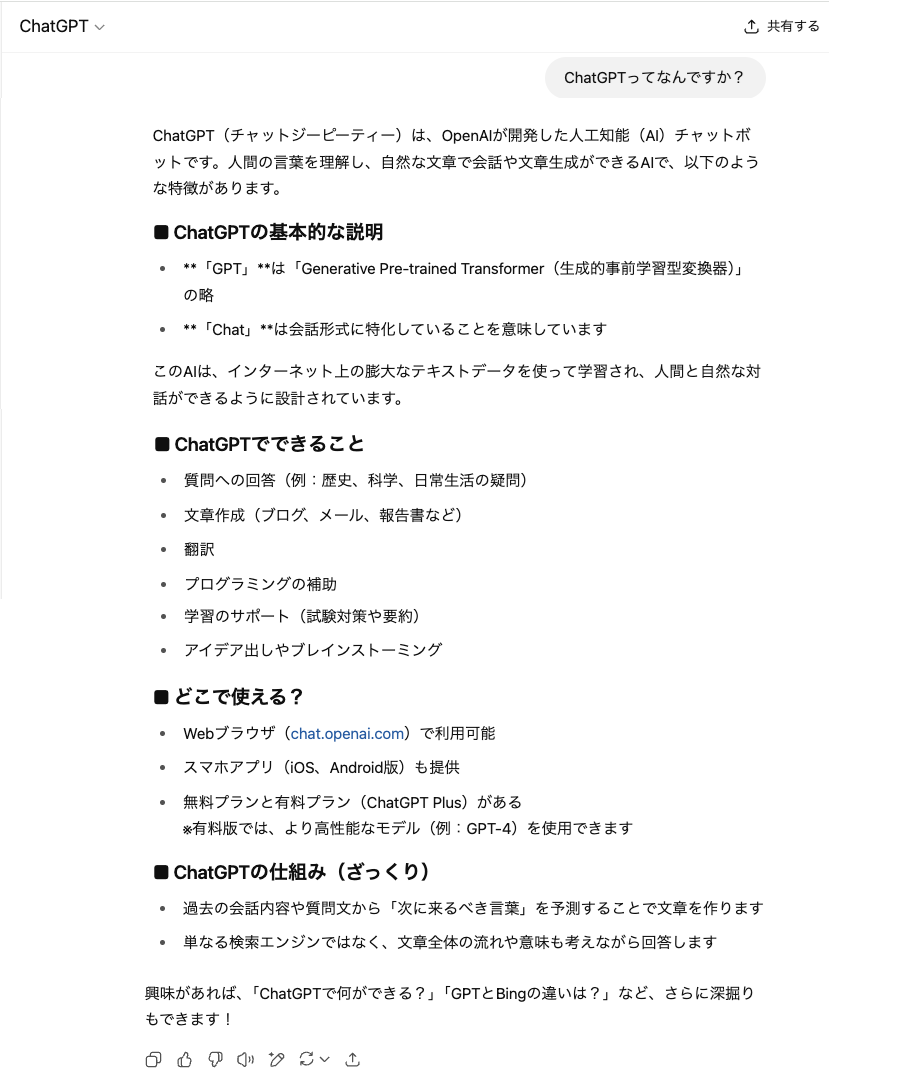

In our 2023 blog post, we asked ChatGPT itself the question, "What is ChatGPT?" and published its answer. Below is the result of asking ChatGPT the same question as of May 2025.

ChatGPT Explanation 2025

Reference: ChatGPT's Own Explanation as of 2023

Compared to the 2023 version, the latest version features a softer writing style, includes concrete examples in its explanations, and shows improvements in both readability and approachability.

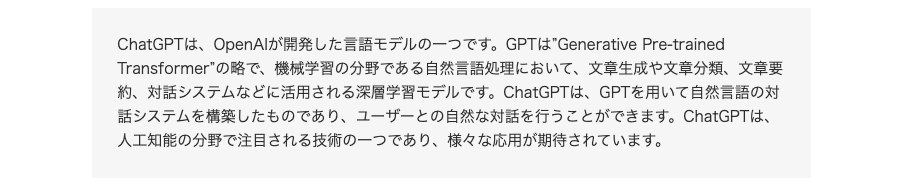

2. What is Annotation (Tagging)?

We also asked ChatGPT to explain this. Initially, the output was in bullet points, but when instructed to summarize the key points concisely, it produced the following text.

Since the 2023 blog used the term "tagging," this article will also use "tagging" from here on.

Reference Blogs:

What is Annotation? Explanation from its Meaning to its Relationship with AI and Machine Learning.

What is Data Labeling? Examples of Data Organization and Utilization.

The Global Market Size of Data Labeling.

3. Conducting and Comparing Tagging Tasks with ChatGPT

In the 2023 verification, we tried the following three tasks.

・Tagging sentences

・Sentiment analysis

・Generating similar sentences

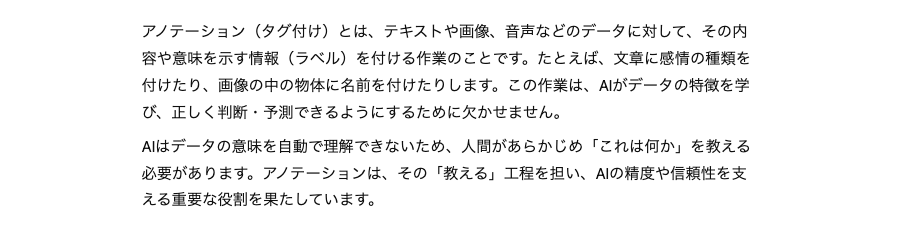

This time, we focused on the tagging task again and re-ran the verification with the same prompt and text used in 2023. The target text is an excerpt from the Wikipedia article on Shohei Ohtani as of March 2023.

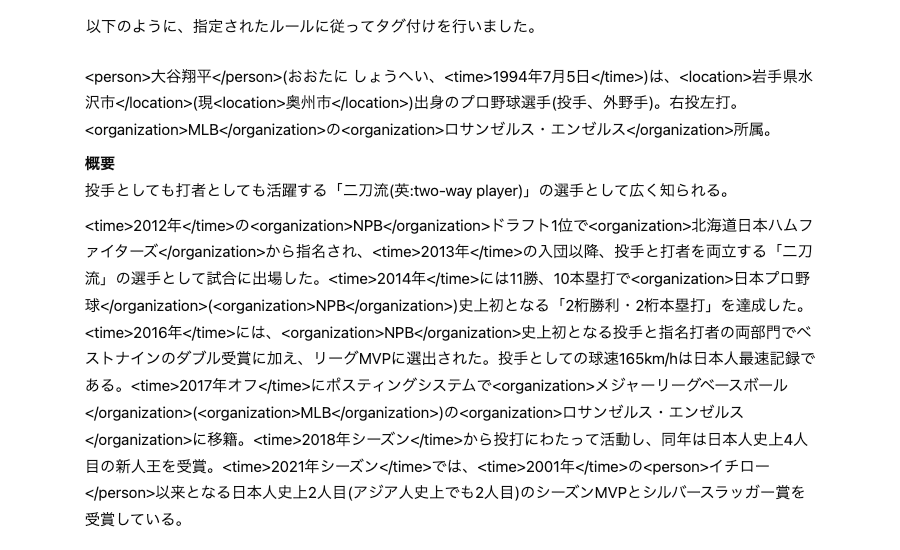

Here are the output results.

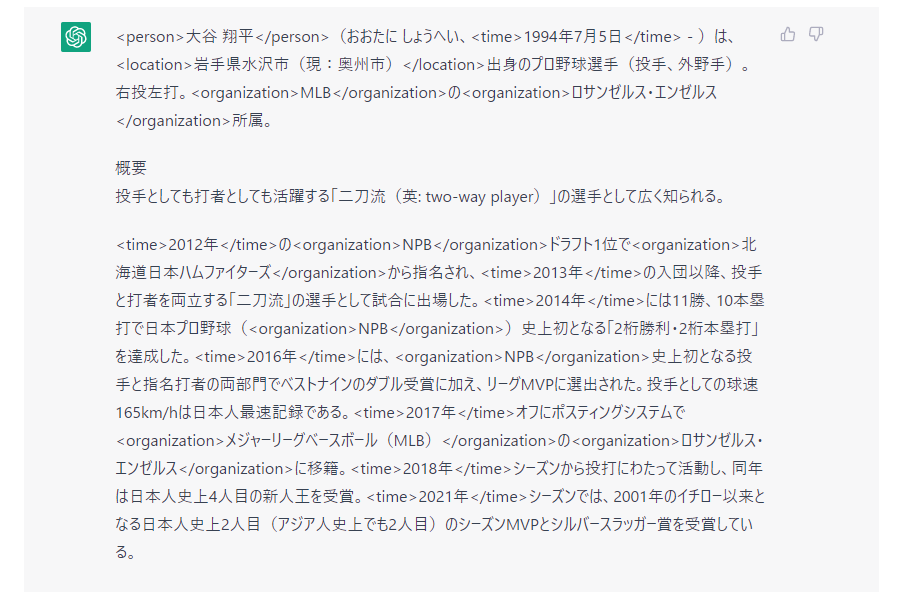

The output published in the 2023 blog post is as follows.

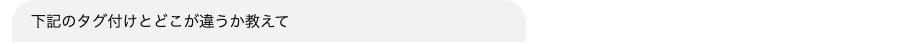

Let's compare the two. ChatGPT was also used for this comparison.

Prompt:

*The 2023 tagging results are entered immediately after this instruction.

Output Results:

*"Your example" in the output refers to the 2023 tagging.

The author also reviewed both outputs and found that ChatGPT had captured the differences accurately. The two outputs differ in how place names and organization names are segmented, in whether an expression such as a given year's "off-season" is treated as a time expression, and in whether the word "season" is included in the tag for expressions of the form "year season." These are edge cases where either approach could be valid, so even human annotators might struggle to decide in practice.

The 2023 output failed to tag "Ichiro" and "2001," but otherwise tagged the same targets as the current output. The 2025 output, by contrast, had no notable omissions or tagging mistakes.

Taking these points into account, we consider the accuracy of tagging with ChatGPT to have improved. At the same time, as the handling of terms like "~off" shows, the way the model interprets certain words has also changed.
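The comparison described in this section can also be done mechanically. The following is a minimal sketch, assuming each tagging result has already been converted into a set of (text, label) pairs. The `Tag` type, the label names, and the sample data are illustrative placeholders, not the actual 2023 or 2025 outputs, apart from the two omissions named above.

```python
from typing import NamedTuple


class Tag(NamedTuple):
    text: str   # surface string that was tagged
    label: str  # tag name, e.g. "PERSON" or "TIME" (hypothetical label set)


def compare_taggings(old: set[Tag], new: set[Tag]) -> dict[str, list]:
    """Report strings tagged in only one run and strings whose label changed."""
    old_by_text = {t.text: t.label for t in old}
    new_by_text = {t.text: t.label for t in new}

    only_new = sorted(set(new_by_text) - set(old_by_text))
    only_old = sorted(set(old_by_text) - set(new_by_text))
    relabeled = sorted(
        (text, old_by_text[text], new_by_text[text])
        for text in set(old_by_text) & set(new_by_text)
        if old_by_text[text] != new_by_text[text]
    )
    return {"only_new": only_new, "only_old": only_old, "relabeled": relabeled}


# Placeholder data for illustration only.
tags_2023 = {Tag("Shohei Ohtani", "PERSON")}
tags_2025 = {
    Tag("Shohei Ohtani", "PERSON"),
    Tag("Ichiro", "PERSON"),
    Tag("2001", "TIME"),
}

report = compare_taggings(tags_2023, tags_2025)
print(report)
```

An exact-match comparison like this surfaces omissions and label changes, but segmentation differences (for example, a place name split in two) appear as unrelated entries in `only_old` and `only_new`, so a human still needs to interpret them.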

4. Summary: Is Tagging with ChatGPT Really Viable?

This verification confirmed that tagging accuracy has improved compared to 2023: no personal names or time expressions were omitted. We consider sufficiently accurate tagging to be possible for texts that mainly contain general information.

However, for highly specialized fields (e.g., medical documents) or texts containing industry-specific terms with inconsistent notation, few examples are likely to be publicly available on the internet, so accuracy may drop because of biases in the training data. In addition, if rules for tagging compound expressions such as "Major League Baseball (MLB)" are not established in advance, unexpected outputs may occur.

Therefore, as was the case in 2023, a hybrid approach of "primary tagging by ChatGPT" plus "human verification" remains the practical choice at this point. This method is expected to significantly reduce the tagging workload. That said, a certain amount of human resources is still needed for final quality assurance. Depending on the task, such as tagging texts in specialized fields, a vast number of tagged documents may have to be checked.
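One way to organize the human verification step is to flag the tags that most need attention. The sketch below is an illustration under stated assumptions, not our actual workflow: it assumes the model output has been parsed into (text, label) pairs, and the `ReviewItem` type, the regular expression, the label names, and the sample sentence are all hypothetical.

```python
import re
from dataclasses import dataclass


@dataclass
class ReviewItem:
    text: str
    label: str
    reason: str


# Hypothetical rule: spans of the form "Full Name (ABBR)" need a human decision
# unless the tagging guideline already fixes how to handle them.
COMPOUND_WITH_ABBREVIATION = re.compile(r".+\s\([A-Z]{2,}\)$")


def build_review_queue(source: str, tags: list[tuple[str, str]]) -> list[ReviewItem]:
    """Return the model-produced tags that a human should check first."""
    queue = []
    for text, label in tags:
        if text not in source:
            # The tagged string does not occur in the source text at all.
            queue.append(ReviewItem(text, label, "tagged text not found in source"))
        elif COMPOUND_WITH_ABBREVIATION.match(text):
            queue.append(ReviewItem(text, label, "compound expression, check tagging rule"))
    return queue


# Made-up sample sentence and tags for illustration.
source = "He joined Major League Baseball (MLB) after the season."
model_tags = [
    ("Major League Baseball (MLB)", "ORGANIZATION"),
    ("Nippon Professional Baseball", "ORGANIZATION"),  # not in the source text
]
for item in build_review_queue(source, model_tags):
    print(item)
```

A check like this only prioritizes tags that were produced. It cannot detect omitted tags, so it supplements rather than replaces the human pass over the text.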

If it is difficult to handle everything in-house, we recommend consulting a specialized vendor. Our company has experience in tagging contracts and corporate reports, so if you are having trouble with document tagging, please feel free to consult us, including whether or not to use generative AI such as ChatGPT.

Reference Blog:

Annotation in the Era of Generative AI: Areas That Can and Cannot Be Automated

5. Human Science's Outsourcing Services for Training Data Creation and LLM/RAG Data Structuring

Over 48 million pieces of training data created

At Human Science, we are involved in AI model development projects across a wide range of industries, starting with natural language processing and extending to medical support, automotive, IT, manufacturing, and construction. Through direct business with many companies, including GAFAM, we have provided over 48 million pieces of high-quality training data. From small-scale projects to large long-term projects with a team of 150 annotators, we handle various training data creation, tagging, and data structuring across industries.

Resource management without crowdsourcing

At Human Science, we do not use crowdsourcing. Instead, projects are handled by personnel who are contracted with us directly. Based on a solid understanding of each member's practical experience and their evaluations from previous projects, we form teams that can deliver maximum performance.

Support not only for creating training data but also for creating and structuring datasets for generative AI and LLMs

In addition to tagging for data organization and creating training data for identification-based AI, we also support the structuring of document data for generative AI and LLM RAG construction. Since our founding, manual production has been our main business and service, and we provide optimal solutions leveraging our unique expertise and deep knowledge of various document structures.

Secure room available on-site

Our Shinjuku office has secure rooms that meet ISMS standards, so we can guarantee security even for projects that include highly confidential data. We consider the preservation of confidentiality to be extremely important for all projects. Our information security management system for remote work has also received high praise from clients, because in addition to implementing hardware measures, we continuously provide security training to our personnel.

In-house Support

We also dispatch experienced annotators and project managers matched to each client's tasks and situation. Teams can also be organized under the client's on-site supervision. In addition, we support the training of your workers and project managers, the selection of tools suited to your situation, automation, work methods, and the construction of optimal processes to improve quality and productivity, assisting with any issues related to annotation and tagging.
