Case Studies

We support AI annotation projects for many companies, including GAFAM.

We take part in AI development projects with rigorous security measures and high-precision annotation across a wide range of fields, including the medical, automotive, and IT industries.

Translation, Documentation, and Annotation Achievements

Achievements and Case Studies

CASE 01: AI Development Project for Advanced Medical Devices

Medical Device Manufacturing Company

| Required Tasks | | | |
|---|---|---|---|
| Customer's Challenges | | | |
| Our Solutions | | | |
| Number of Tasks | 10,000 items | Work Period | 2 months |
| Main Takeaways | | | |

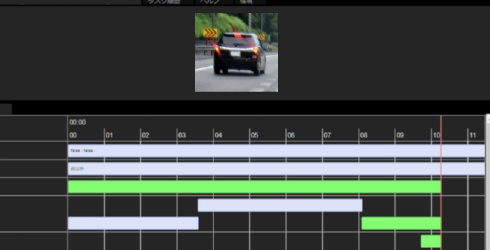

CASE 02: Autonomous Driving AI Accuracy Improvement Project

AI Technology Development Manufacturer

| Required Tasks | | | |
|---|---|---|---|
| Customer's Challenges | | | |
| Our Solutions | | | |
| Number of Tasks | Over 6,000 items | Work Period | Over 6 months |
| Main Takeaways | | | |

CASE 03: AI Assistant User Request Understanding Improvement Project

Global IT Company

| Required Tasks | | | |
|---|---|---|---|
| Customer's Challenges | | | |
| Our Solutions | | | |
| Number of Tasks | About 450,000 items | Work Period | 6 months |
| Main Takeaways | | | |

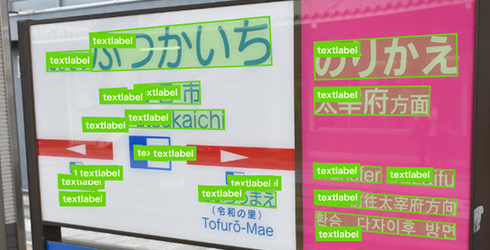

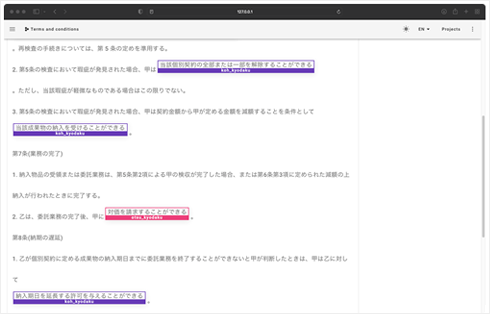

CASE 04: Project to Improve OCR Text Recognition Accuracy

Global IT Company

| Required Tasks | | | |
|---|---|---|---|
| Customer's Challenges | | | |
| Our Solutions | | | |
| Number of Tasks | 22,000 items | Work Period | 1,600 hours/month |
| Main Takeaways | | | |

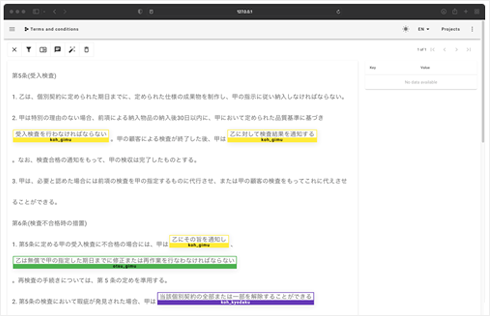

CASE 05: AI Automated Contract Content Confirmation Project

Global IT Company

| Required Tasks | | | |
|---|---|---|---|
| Customer's Challenges | | | |
| Our Solutions | | | |
| Number of Tasks | About 200 items | Work Period | 3 months |
| Main Takeaways | | | |

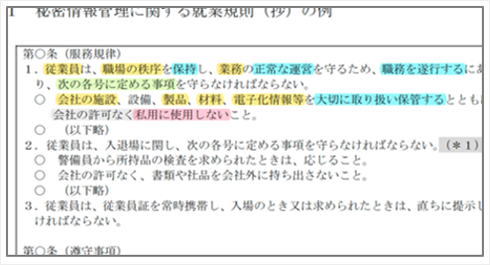

CASE 06: Automated Tissue Region Detection AI PoC Project

Medical Device Manufacturer

| Required Tasks | | | |
|---|---|---|---|
| Customer's Challenges | | | |
| Our Solutions | | | |
| Number of Tasks | About 2,000 items | Work Period | 2 weeks |
| Main Takeaways | | | |

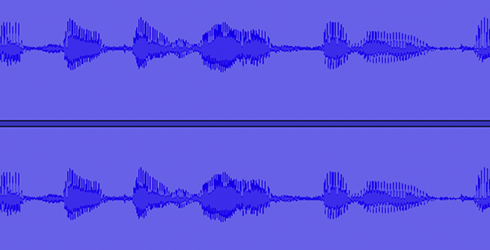

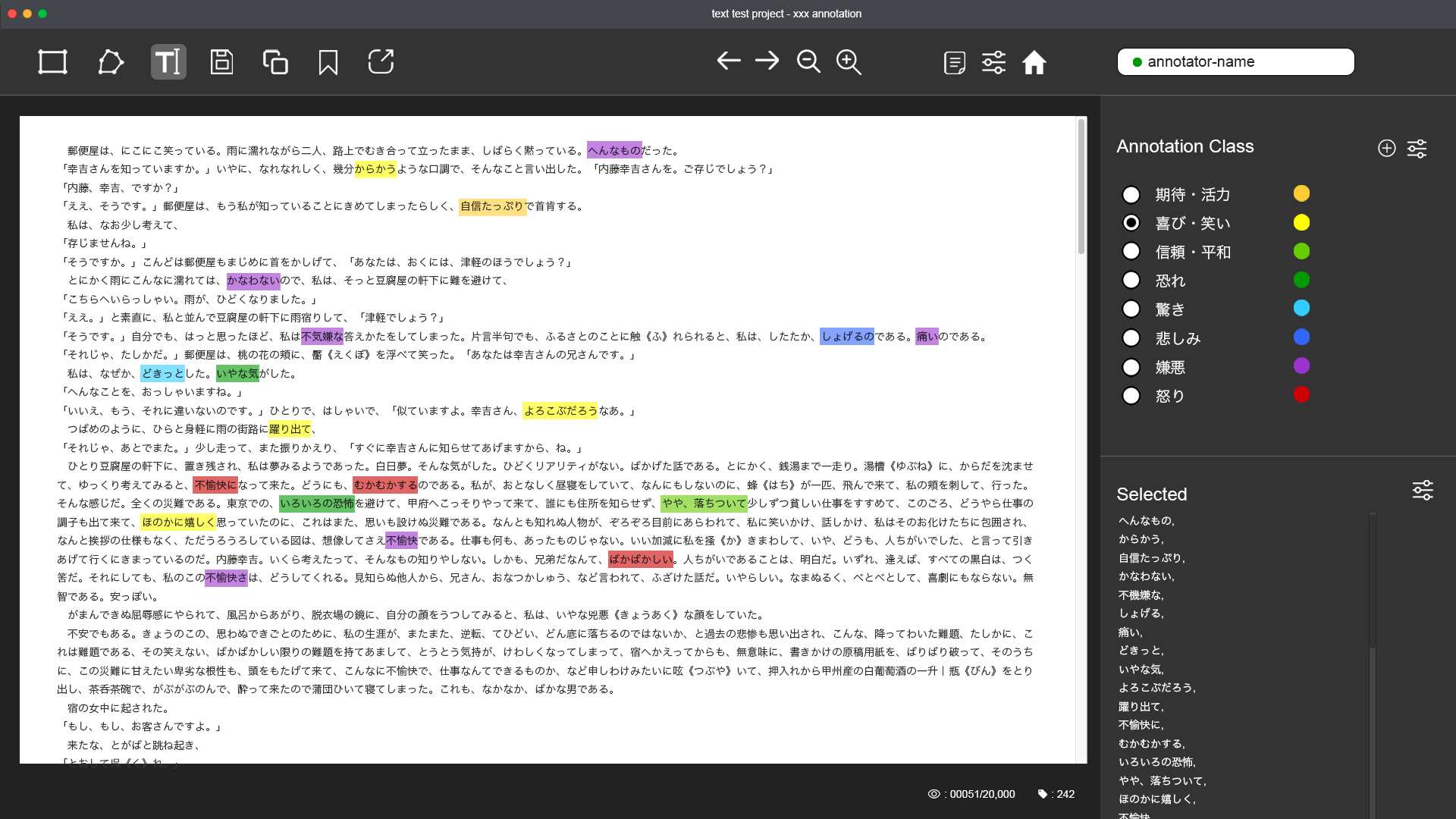

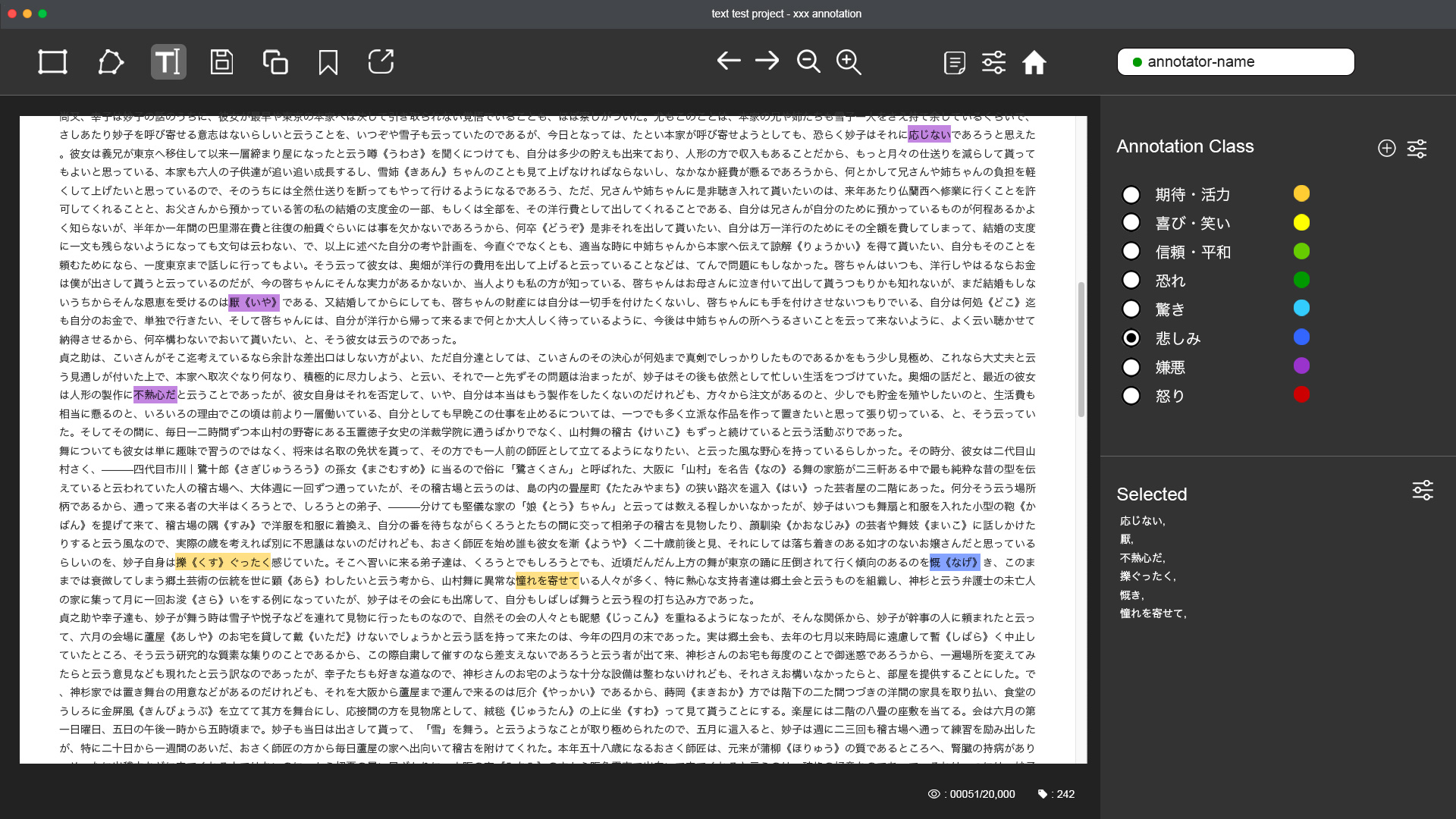

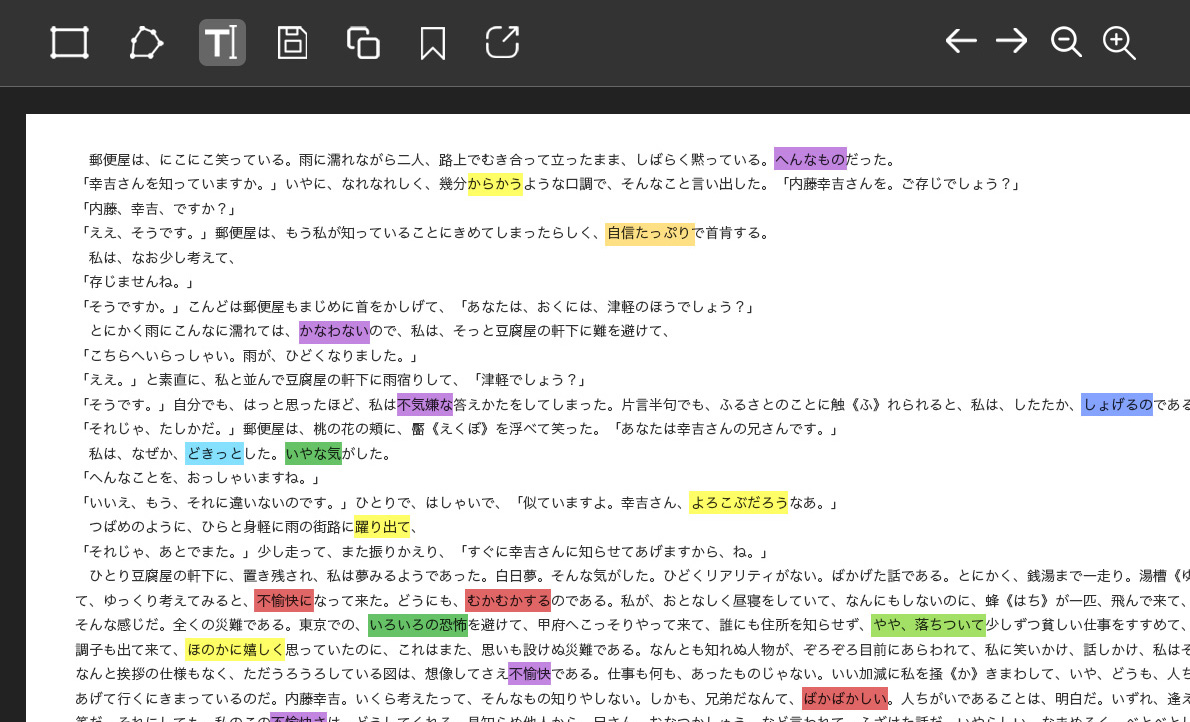

CASE 07: Conversation Emotion Analysis AI Project

Content Production IT Company

| Required Tasks | | | |
|---|---|---|---|
| Customer's Challenges | | | |
| Our Solutions | | | |
| Number of Tasks | 20,000 items | Work Period | About 2 months |
| Main Takeaways | | | |

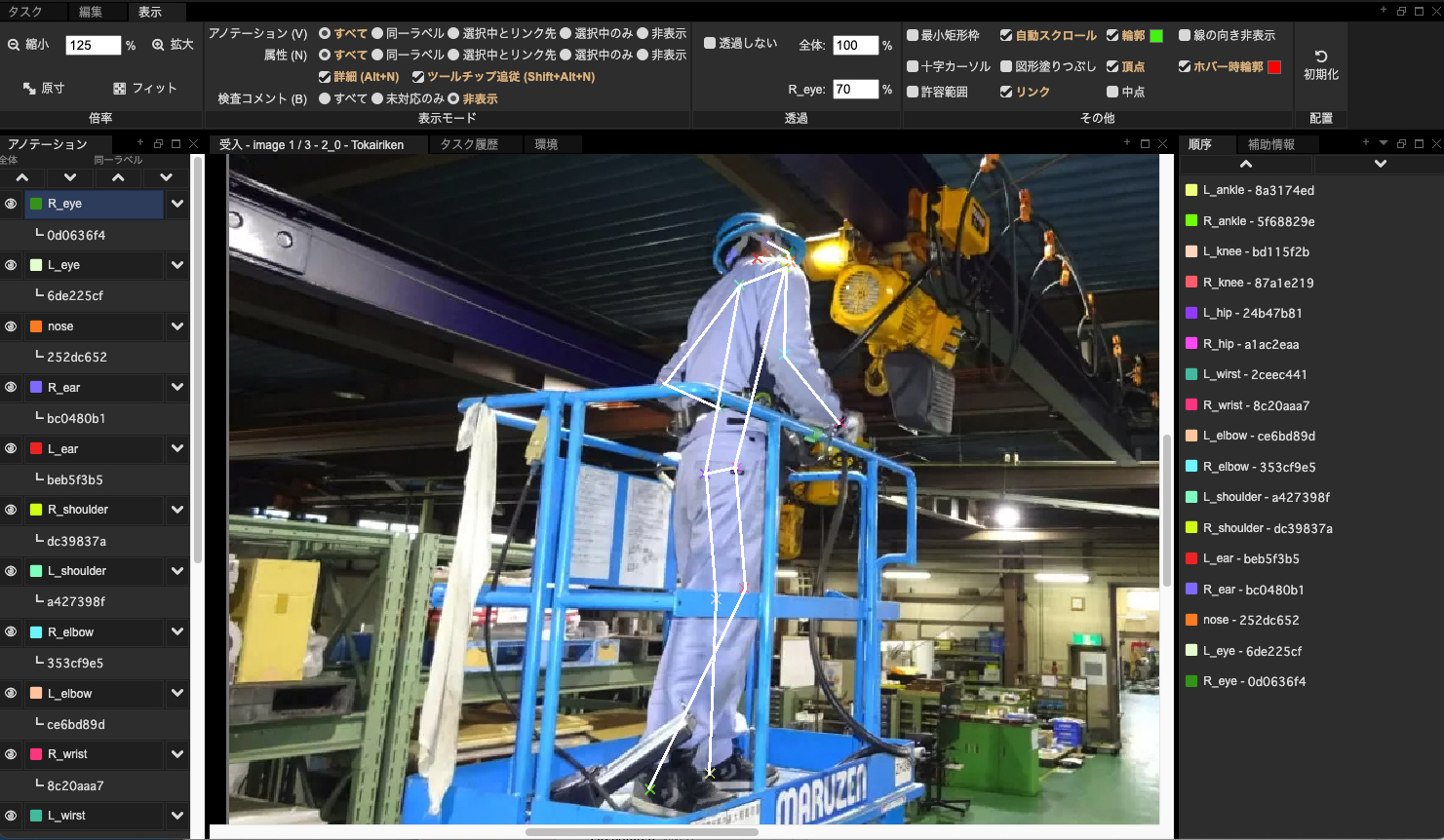

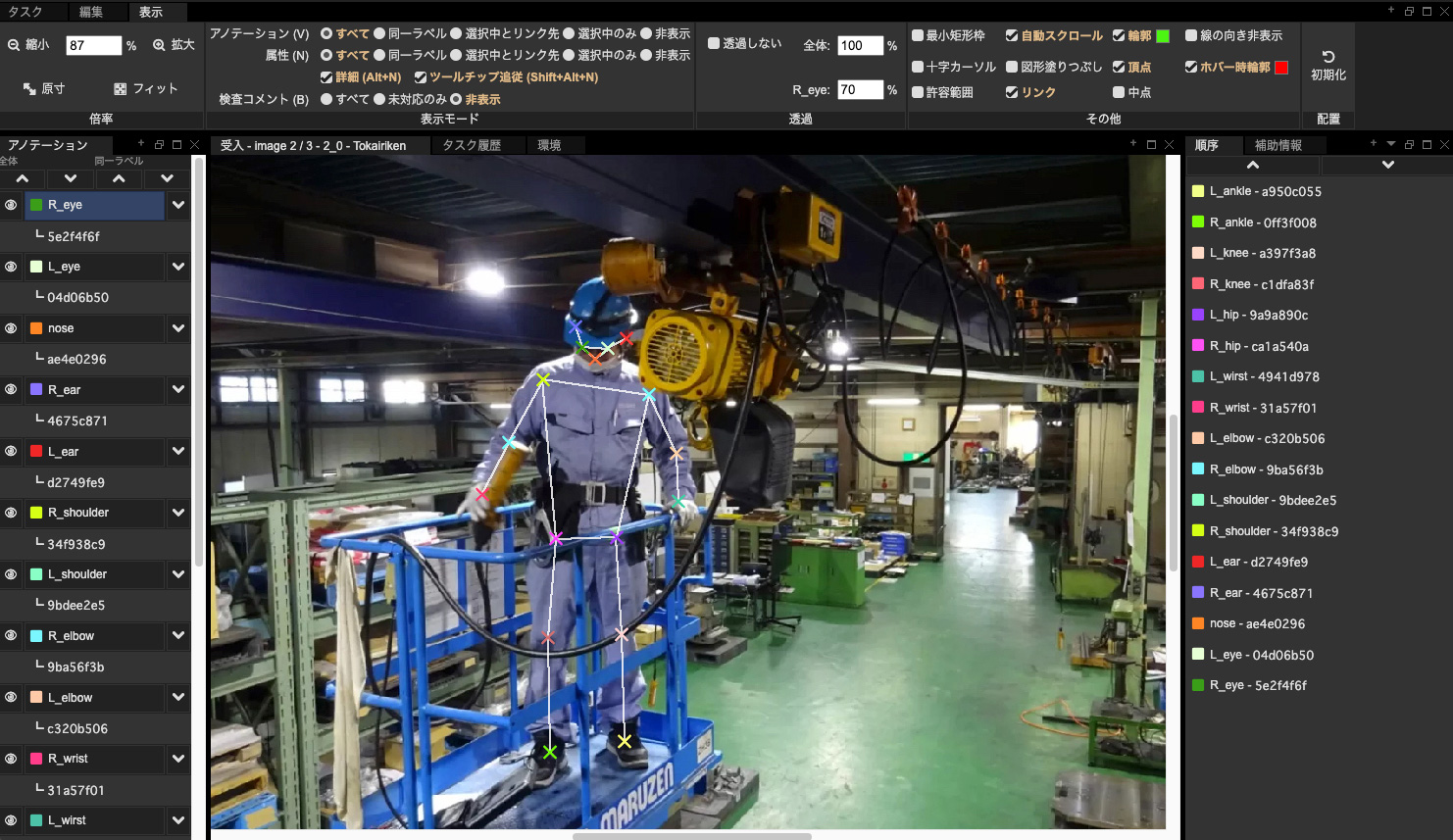

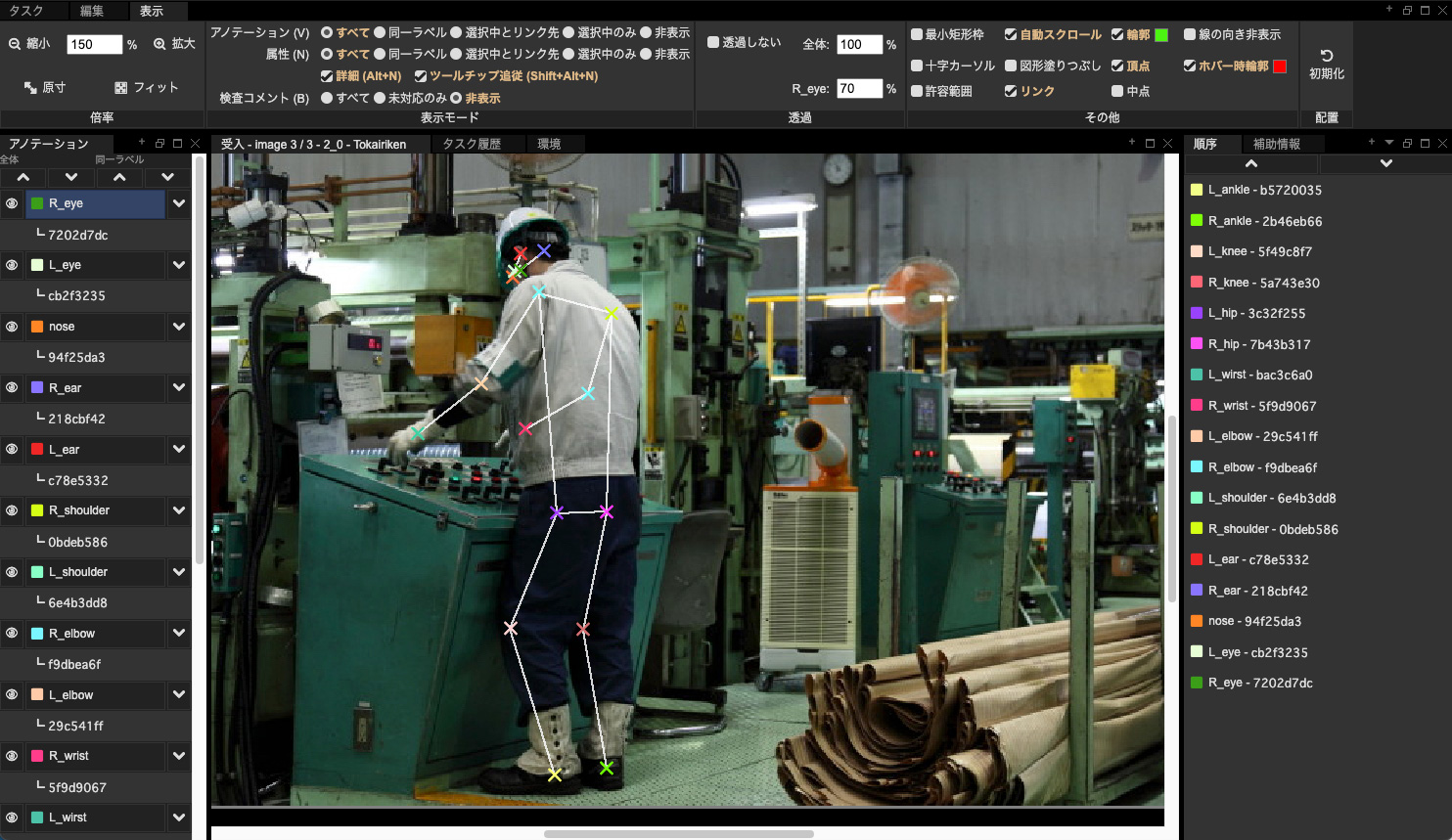

CASE 08: Machine Operation Behavior Analysis AI Project

Machine Tool Manufacturer

| Required Tasks | | | |
|---|---|---|---|
| Customer's Challenges | | | |
| Our Solutions | | | |
| Number of Tasks | 3,000 files | Work Period | 3 weeks |
| Main Takeaways | | | |

CASE 11: "To be honest, there is nothing but satisfaction with your company."

AI Anomaly Detection Project - Business IT Company

| Required Tasks | | | |
|---|---|---|---|
| Customer's Challenges | | | |
| Our Solutions | | | |
| Number of Tasks | 5 types of tasks, approximately 7,000 files | Work Period | About 4 months |
| Main Takeaways | A customer interview is available for this case. | | |

CASE 12: "We received candid feedback even on minor discrepancies and differences in definition recognition."

Comprehensive Machinery Manufacturer

| Required Tasks | | | |
|---|---|---|---|
| Customer's Challenges | | | |
| Our Solutions | | | |
| Number of Tasks | Approximately 3,000 files | Work Period | About 1 month |
| Main Takeaways | A customer interview is available for this case. | | |

Other Case Studies

- Natural Language Processing: Data Generation for AI Assistant. A project to improve the accuracy of an AI assistant. We assigned native speakers to generate a large volume of natural text that general users would be likely to say as requests to the AI assistant.

- Map Information: Improved Map App Route Proposal Feature. A project to improve user satisfaction with a map app. By evaluating whether the location the app perceived and the routes it suggested were appropriate, we produced a large quantity of high-quality, more accurate training data.

- OCR Text: Improved Optical Text Recognition Accuracy. Text area extraction from images, requested by an overseas company. Within 3 business days we organized a team of annotators who could understand and apply English work manuals and feedback as is. We completed the project by the deadline without spending time on translation or interpretation.

- Speech Recognition: Creation of Training Data for Voice Reading. A project to create training data using multilingual speech synthesis. The project team was composed of native speakers of each language, and voice data was created in Japanese, English, Chinese, and Korean. The resources cultivated in our translation business proved helpful here.

- Machine Translation Evaluation: Creation of Machine Translation Training Data. A project to evaluate machine translation output and improve the quality of training data. This work contributes to improving translation accuracy by integrating with natural language processing. Both our translation business experience and our knowledge of natural language processing from AI annotation work were put to use.

- Intent Extraction: Search Engine Accuracy Evaluation. A project to improve a search engine's understanding of user intent. Workers evaluated whether the browser was displaying appropriate results for each user search input.
